Release ERNIE 2.0

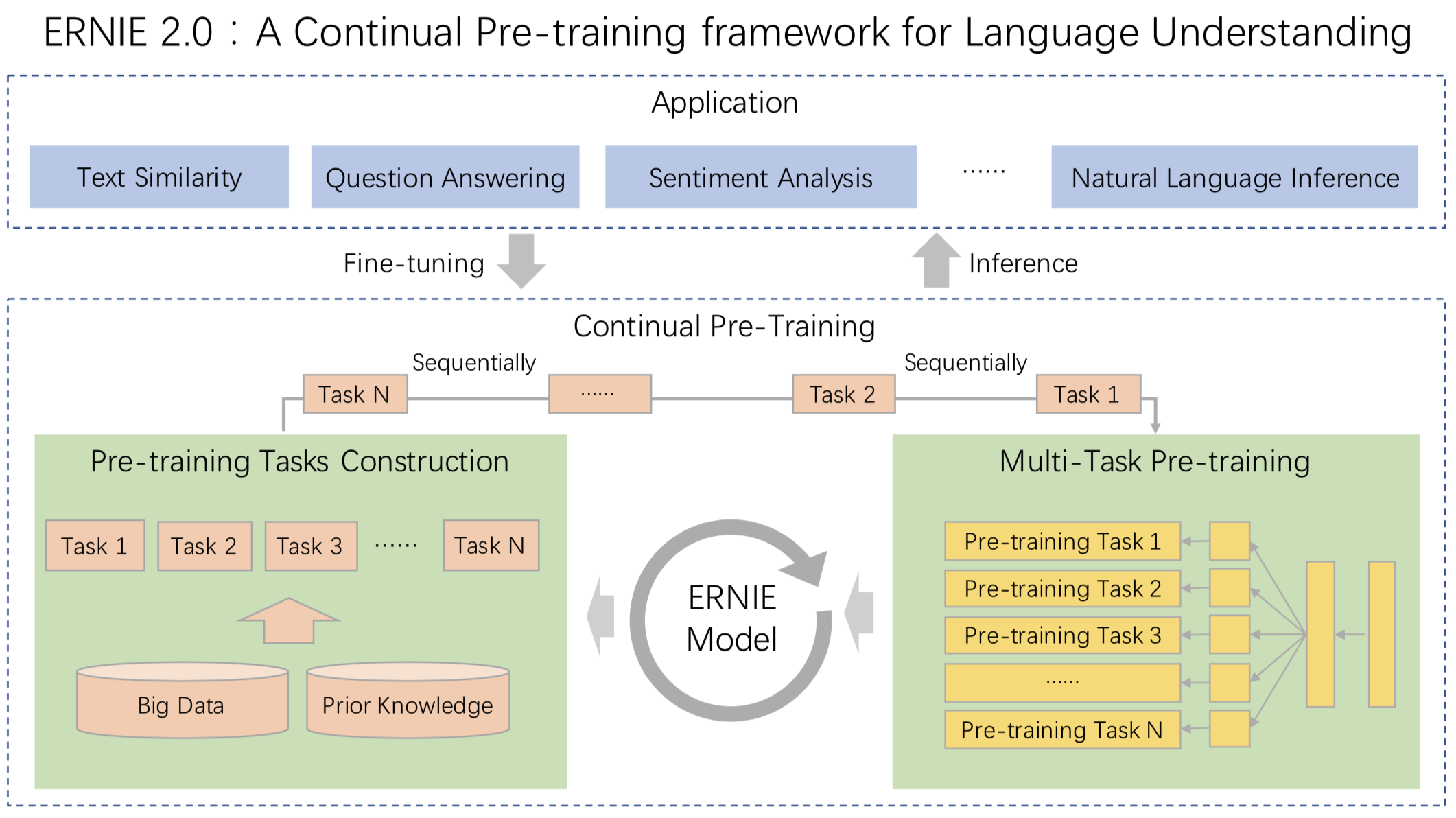

ERNIE 2.0 is a continual pre-training framework for language understanding in which pre-training tasks can be incrementally built and learned through multi-task learning

Showing

.metas/ernie2.0_arch.png

0 → 100644

303.4 KB

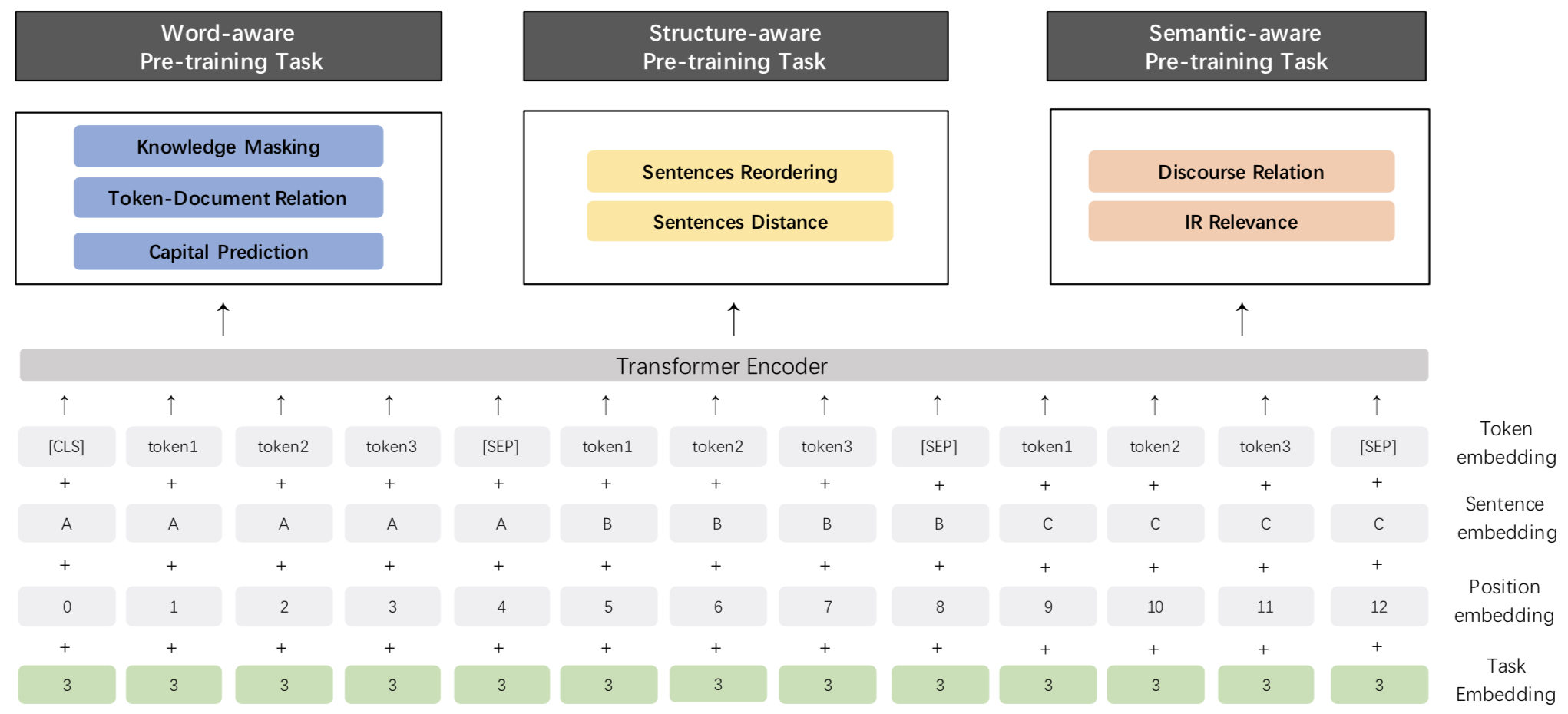

.metas/ernie2.0_model.png

0 → 100644

169.8 KB

README.zh.md

0 → 100644

classify_infer.py

0 → 100644

config/vocab_en.txt

0 → 100644

因为 它太大了无法显示 source diff 。你可以改为 查看blob。

finetune/mrc.py

0 → 100644

model/ernie.py

0 → 100644

run_classifier.py

0 → 100644

因为 它太大了无法显示 source diff 。你可以改为 查看blob。

因为 它太大了无法显示 source diff 。你可以改为 查看blob。

script/en_glue/preprocess/cvt.sh

0 → 100644

script/en_glue/preprocess/mnli.py

0 → 100644

script/en_glue/preprocess/qnli.py

0 → 100644

utils/cmrc2018_eval.py

0 → 100644