diff --git a/.gitignore b/.gitignore

index cc8fff8770b97a3f31eb49270ad32ac25af30fad..ad8e74925d712f617305045bd9264744a9c462e2 100644

--- a/.gitignore

+++ b/.gitignore

@@ -2,6 +2,7 @@

*.pyc

.vscode

*log

+*.wav

*.pdmodel

*.pdiparams*

*.zip

@@ -13,6 +14,7 @@

*.whl

*.egg-info

build

+*output/

docs/build/

docs/topic/ctc/warp-ctc/

@@ -30,5 +32,6 @@ tools/OpenBLAS/

tools/Miniconda3-latest-Linux-x86_64.sh

tools/activate_python.sh

tools/miniconda.sh

+tools/CRF++-0.58/

-*output/

+speechx/fc_patch/

\ No newline at end of file

diff --git a/README.md b/README.md

index 46730797ba6c99549ddc178a4babd38875217768..46f492e998028485e0b1322d550eeb52eb49d0d7 100644

--- a/README.md

+++ b/README.md

@@ -148,6 +148,12 @@ For more synthesized audios, please refer to [PaddleSpeech Text-to-Speech sample

- [PaddleSpeech Demo Video](https://paddlespeech.readthedocs.io/en/latest/demo_video.html)

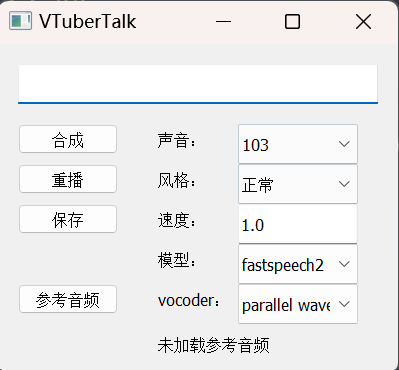

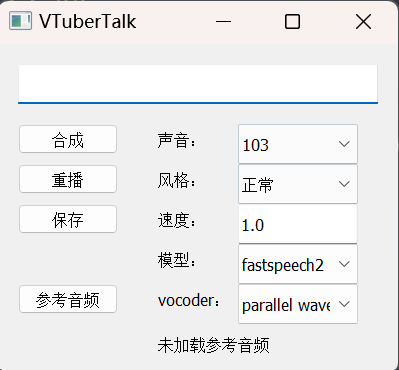

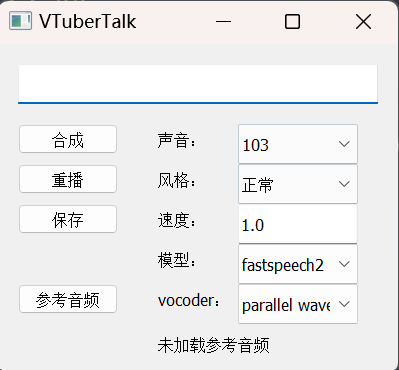

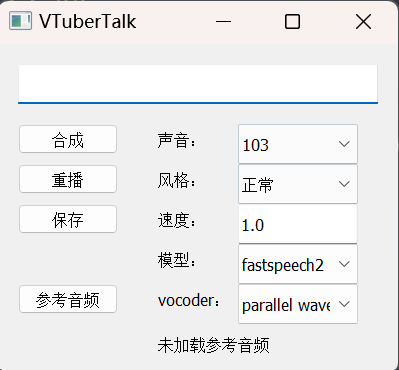

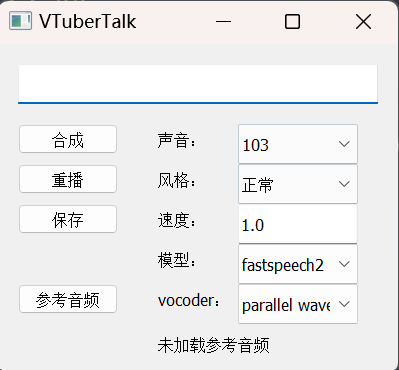

+- **[VTuberTalk](https://github.com/jerryuhoo/VTuberTalk): Use PaddleSpeech TTS and ASR to clone voice from videos.**

+

+

+

+

+

+

+

+ +

+ +

+