Merge branch 'develop' into datapipe

Showing

.bashrc

已删除

100644 → 0

.vimrc

已删除

100644 → 0

demos/asr_hub/README.md

0 → 100644

demos/asr_hub/hub_infer.py

0 → 100644

demos/asr_hub/run.sh

0 → 100755

demos/echo_hub/.gitignore

0 → 100644

demos/echo_hub/README.md

0 → 100644

demos/echo_hub/hub_infer.py

0 → 100644

demos/echo_hub/run.sh

0 → 100755

demos/metaverse/Lamarr.png

0 → 100644

441.0 KB

speechnn/env.sh

→

demos/metaverse/path.sh

100644 → 100755

demos/metaverse/run.sh

0 → 100755

demos/metaverse/sentences.txt

0 → 100644

demos/story_talker/imgs/000.jpg

0 → 100644

1.5 MB

demos/story_talker/ocr.py

0 → 100644

demos/story_talker/path.sh

0 → 100755

demos/story_talker/run.sh

0 → 100755

demos/story_talker/simfang.ttf

0 → 100644

文件已添加

demos/style_fs2/path.sh

0 → 100755

demos/style_fs2/run.sh

0 → 100755

demos/style_fs2/sentences.txt

0 → 100644

demos/style_fs2/style_syn.py

0 → 100644

demos/tts_hub/README.md

0 → 100644

demos/tts_hub/hub_infer.py

0 → 100644

demos/tts_hub/run.sh

0 → 100755

docs/source/dependencies.md

0 → 100644

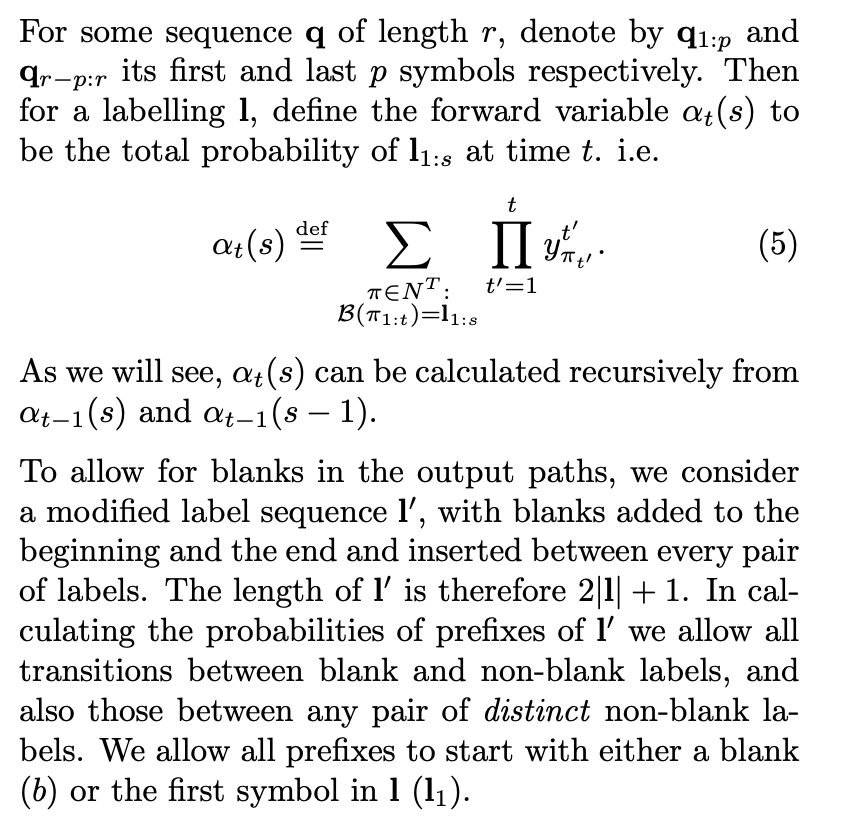

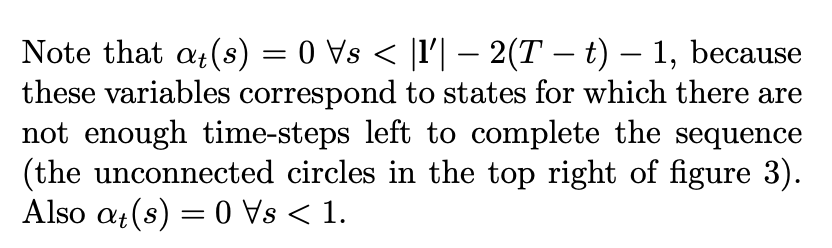

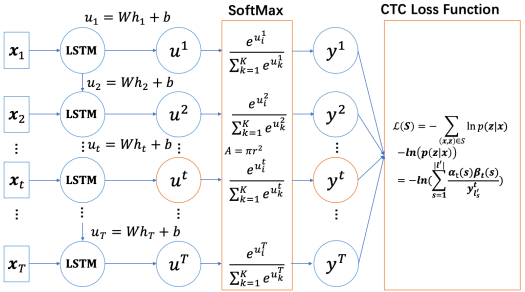

docs/topic/ctc/ctc_loss.ipynb

0 → 100644

162.1 KB

65.8 KB

50.6 KB

20.6 KB

50.4 KB

48.3 KB

114.8 KB

123.4 KB

112.6 KB

49.8 KB

31.3 KB

111.5 KB

223.1 KB

140.1 KB

107.9 KB

125.5 KB

46.7 KB

117.1 KB

1.5 MB

docs/tutorial/tts/source/ocr.wav

0 → 100644

文件已添加

107.5 KB

224.2 KB

1.5 MB

581.2 KB

文件已添加

367.9 KB

examples/aishell3/vc1/README.md

0 → 100644

examples/aishell3/vc1/path.sh

0 → 100755

examples/aishell3/vc1/run.sh

0 → 100755

examples/aishell3/voc1/README.md

0 → 100644

examples/aishell3/voc1/path.sh

0 → 100755

examples/aishell3/voc1/run.sh

0 → 100755

examples/csmsc/voc3/finetune.sh

0 → 100755

文件已移动

examples/other/g2p/run.sh

0 → 100755

examples/other/tn/README.md

0 → 100644

文件已移动

文件已移动

文件已移动

paddlespeech/cls/__init__.py

0 → 100644

paddlespeech/vector/__init__.py

0 → 100644

文件已移动

文件已移动

文件已移动

文件已移动

| ConfigArgParse | ConfigArgParse | ||

| coverage | coverage | ||

| distro | |||

| editdistance | editdistance | ||

| g2p_en | g2p_en | ||

| g2pM | g2pM | ||

| gpustat | gpustat | ||

| GPUtil | |||

| h5py | h5py | ||

| inflect | inflect | ||

| jieba | jieba | ||

| ... | @@ -16,30 +18,35 @@ matplotlib | ... | @@ -16,30 +18,35 @@ matplotlib |

| nara_wpe | nara_wpe | ||

| nltk | nltk | ||

| numba | numba | ||

| numpy==1.20.0 | paddlespeech_ctcdecoders | ||

| paddlespeech_feat | |||

| pandas | pandas | ||

| phkit | phkit | ||

| Pillow | Pillow | ||

| praatio~=4.1 | praatio~=4.1 | ||

| pre-commit | pre-commit | ||

| psutil | |||

| pybind11 | pybind11 | ||

| pynvml | |||

| pypi-kenlm | |||

| pypinyin | pypinyin | ||

| python-dateutil | python-dateutil | ||

| pyworld | pyworld | ||

| resampy==0.2.2 | resampy==0.2.2 | ||

| sacrebleu | sacrebleu | ||

| scipy==1.2.1 | scipy | ||

| sentencepiece | sentencepiece~=0.1.96 | ||

| snakeviz | snakeviz | ||

| soundfile~=0.10 | soundfile~=0.10 | ||

| sox | sox | ||

| soxbindings | |||

| tensorboardX | tensorboardX | ||

| textgrid | textgrid | ||

| timer | timer | ||

| tqdm | tqdm | ||

| typeguard | typeguard | ||

| unidecode | unidecode | ||

| visualdl==2.2.0 | visualdl | ||

| webrtcvad | webrtcvad | ||

| yacs | yacs | ||

| yq | yq |

setup.sh

0 → 100644

speechnn/.gitignore

已删除

100644 → 0

speechnn/CMakeLists.txt

已删除

100644 → 0

speechnn/examples/.gitkeep

已删除

100644 → 0

tools/extras/install_mfa_v1.sh

0 → 100755

tools/extras/install_sclite.sh

0 → 100755

tools/extras/install_sox.sh

0 → 100755

tools/extras/install_venv.sh

0 → 100755