diff --git a/.gitignore b/.gitignore

index cc8fff8770b97a3f31eb49270ad32ac25af30fad..778824f5e8a3c655cea60c81f259625da45dd40f 100644

--- a/.gitignore

+++ b/.gitignore

@@ -2,6 +2,7 @@

*.pyc

.vscode

*log

+*.wav

*.pdmodel

*.pdiparams*

*.zip

@@ -30,5 +31,8 @@ tools/OpenBLAS/

tools/Miniconda3-latest-Linux-x86_64.sh

tools/activate_python.sh

tools/miniconda.sh

+tools/CRF++-0.58/

+

+speechx/fc_patch/

*output/

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

index 60f0b92f6025d78908cf5043161c6b21771aaa95..7fb01708a3de083c368031e7353fd35e2455788a 100644

--- a/.pre-commit-config.yaml

+++ b/.pre-commit-config.yaml

@@ -50,12 +50,13 @@ repos:

entry: bash .pre-commit-hooks/clang-format.hook -i

language: system

files: \.(c|cc|cxx|cpp|cu|h|hpp|hxx|cuh|proto)$

+ exclude: (?=speechx/speechx/kaldi).*(\.cpp|\.cc|\.h|\.py)$

- id: copyright_checker

name: copyright_checker

entry: python .pre-commit-hooks/copyright-check.hook

language: system

files: \.(c|cc|cxx|cpp|cu|h|hpp|hxx|proto|py)$

- exclude: (?=third_party|pypinyin).*(\.cpp|\.h|\.py)$

+ exclude: (?=third_party|pypinyin|speechx/speechx/kaldi).*(\.cpp|\.cc|\.h|\.py)$

- repo: https://github.com/asottile/reorder_python_imports

rev: v2.4.0

hooks:

diff --git a/README.md b/README.md

index 46730797ba6c99549ddc178a4babd38875217768..46f492e998028485e0b1322d550eeb52eb49d0d7 100644

--- a/README.md

+++ b/README.md

@@ -148,6 +148,12 @@ For more synthesized audios, please refer to [PaddleSpeech Text-to-Speech sample

- [PaddleSpeech Demo Video](https://paddlespeech.readthedocs.io/en/latest/demo_video.html)

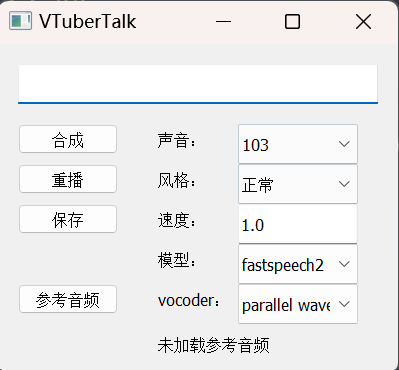

+- **[VTuberTalk](https://github.com/jerryuhoo/VTuberTalk): Use PaddleSpeech TTS and ASR to clone voice from videos.**

+