!15528 Translation completed: 15272+14407+15018+14814+14539+14658+14627+14642+15283 media API + folder update

Merge pull request !15528 from wusongqing/TR15272

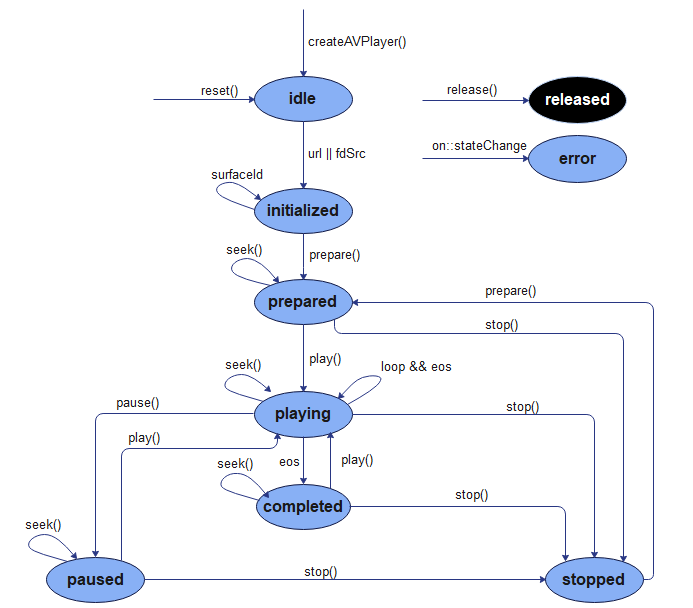

37.5 KB

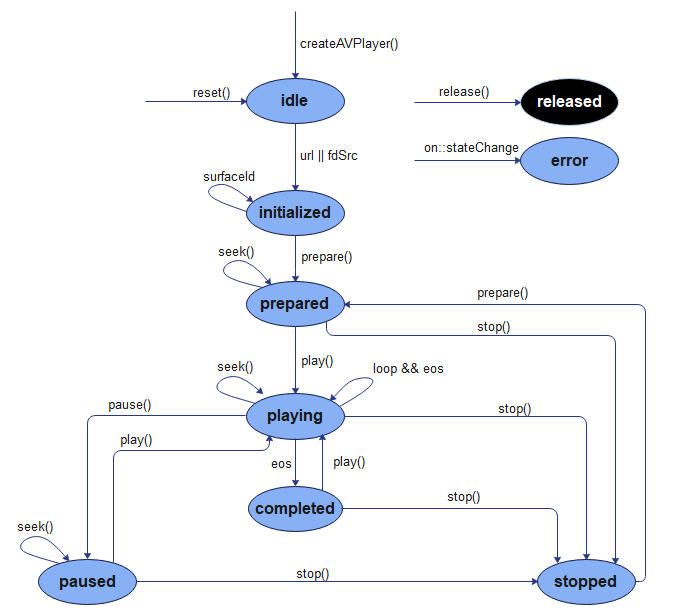

36.3 KB