diff --git a/README.md b/README.md

index 1ce6fabb55e231a7748bf2baeefc2d2fb4ffb2e5..a5c52bdb356fa765931fa7046aca5e4ef72caa95 100644

--- a/README.md

+++ b/README.md

@@ -93,7 +93,7 @@ For a new language request, please refer to [Guideline for new language_requests

- [Quick Inference Based on PIP](./doc/doc_en/whl_en.md)

- [Python Inference](./doc/doc_en/inference_en.md)

- [C++ Inference](./deploy/cpp_infer/readme_en.md)

- - [Serving](./deploy/hubserving/readme_en.md)

+ - [Serving](./deploy/pdserving/README.md)

- [Mobile](https://github.com/PaddlePaddle/PaddleOCR/blob/develop/deploy/lite/readme_en.md)

- [Benchmark](./doc/doc_en/benchmark_en.md)

- Data Annotation and Synthesis

diff --git a/README_ch.md b/README_ch.md

index 9fe853f92510e540e35b7452f6ab00e5898ddb55..0430fe759f62155ad97d73db06445bbfe551c181 100755

--- a/README_ch.md

+++ b/README_ch.md

@@ -8,9 +8,10 @@ PaddleOCR同时支持动态图与静态图两种编程范式

- 静态图版本:develop分支

**近期更新**

+- 【预告】 PaddleOCR研发团队对最新发版内容技术深入解读,4月13日晚上19:00,[直播地址](https://live.bilibili.com/21689802)

+- 2021.4.8 release 2.1版本,新增AAAI 2021论文[端到端识别算法PGNet](./doc/doc_ch/pgnet.md)开源,[多语言模型](./doc/doc_ch/multi_languages.md)支持种类增加到80+。

- 2021.2.1 [FAQ](./doc/doc_ch/FAQ.md)新增5个高频问题,总数162个,每周一都会更新,欢迎大家持续关注。

-- 2021.1.26,28,29 PaddleOCR官方研发团队带来技术深入解读三日直播课,1月26日、28日、29日晚上19:30,[直播地址](https://live.bilibili.com/21689802)

-- 2021.1.21 更新多语言识别模型,目前支持语种超过27种,[多语言模型下载](./doc/doc_ch/models_list.md),包括中文简体、中文繁体、英文、法文、德文、韩文、日文、意大利文、西班牙文、葡萄牙文、俄罗斯文、阿拉伯文等,后续计划可以参考[多语言研发计划](https://github.com/PaddlePaddle/PaddleOCR/issues/1048)

+- 2021.1.21 更新多语言识别模型,目前支持语种超过27种,包括中文简体、中文繁体、英文、法文、德文、韩文、日文、意大利文、西班牙文、葡萄牙文、俄罗斯文、阿拉伯文等,后续计划可以参考[多语言研发计划](https://github.com/PaddlePaddle/PaddleOCR/issues/1048)

- 2020.12.15 更新数据合成工具[Style-Text](./StyleText/README_ch.md),可以批量合成大量与目标场景类似的图像,在多个场景验证,效果明显提升。

- 2020.11.25 更新半自动标注工具[PPOCRLabel](./PPOCRLabel/README_ch.md),辅助开发者高效完成标注任务,输出格式与PP-OCR训练任务完美衔接。

- 2020.9.22 更新PP-OCR技术文章,https://arxiv.org/abs/2009.09941

@@ -74,11 +75,13 @@ PaddleOCR同时支持动态图与静态图两种编程范式

## 文档教程

- [快速安装](./doc/doc_ch/installation.md)

- [中文OCR模型快速使用](./doc/doc_ch/quickstart.md)

+- [多语言OCR模型快速使用](./doc/doc_ch/multi_languages.md)

- [代码组织结构](./doc/doc_ch/tree.md)

- 算法介绍

- [文本检测](./doc/doc_ch/algorithm_overview.md)

- [文本识别](./doc/doc_ch/algorithm_overview.md)

- [PP-OCR Pipline](#PP-OCR)

+ - [端到端PGNet算法](./doc/doc_ch/pgnet.md)

- 模型训练/评估

- [文本检测](./doc/doc_ch/detection.md)

- [文本识别](./doc/doc_ch/recognition.md)

@@ -88,7 +91,7 @@ PaddleOCR同时支持动态图与静态图两种编程范式

- [基于pip安装whl包快速推理](./doc/doc_ch/whl.md)

- [基于Python脚本预测引擎推理](./doc/doc_ch/inference.md)

- [基于C++预测引擎推理](./deploy/cpp_infer/readme.md)

- - [服务化部署](./deploy/hubserving/readme.md)

+ - [服务化部署](./deploy/pdserving/README_CN.md)

- [端侧部署](https://github.com/PaddlePaddle/PaddleOCR/blob/develop/deploy/lite/readme.md)

- [Benchmark](./doc/doc_ch/benchmark.md)

- 数据集

diff --git a/configs/det/det_r50_vd_sast_icdar15.yml b/configs/det/det_r50_vd_sast_icdar15.yml

index c24cae90132c68d662e9edb7a7975e358fb40d9c..c90327b22b9c73111c997e84cfdd47d0721ee5b9 100755

--- a/configs/det/det_r50_vd_sast_icdar15.yml

+++ b/configs/det/det_r50_vd_sast_icdar15.yml

@@ -14,12 +14,13 @@ Global:

load_static_weights: True

cal_metric_during_train: False

pretrained_model: ./pretrain_models/ResNet50_vd_ssld_pretrained/

- checkpoints:

+ checkpoints:

save_inference_dir:

use_visualdl: False

- infer_img:

+ infer_img:

save_res_path: ./output/sast_r50_vd_ic15/predicts_sast.txt

+

Architecture:

model_type: det

algorithm: SAST

diff --git a/configs/e2e/e2e_r50_vd_pg.yml b/configs/e2e/e2e_r50_vd_pg.yml

new file mode 100644

index 0000000000000000000000000000000000000000..e4d868f98b5847fa064e14f87a69932806791320

--- /dev/null

+++ b/configs/e2e/e2e_r50_vd_pg.yml

@@ -0,0 +1,116 @@

+Global:

+ use_gpu: True

+ epoch_num: 600

+ log_smooth_window: 20

+ print_batch_step: 10

+ save_model_dir: ./output/pgnet_r50_vd_totaltext/

+ save_epoch_step: 10

+ # evaluation is run every 1000 iterations after the 0th iteration

+ eval_batch_step: [ 0, 1000 ]

+ # 1. If pretrained_model is saved in static mode, such as classification pretrained model

+ # from static branch, load_static_weights must be set as True.

+ # 2. If you want to finetune the pretrained models we provide in the docs,

+ # you should set load_static_weights as False.

+ load_static_weights: False

+ cal_metric_during_train: False

+ pretrained_model:

+ checkpoints:

+ save_inference_dir:

+ use_visualdl: False

+ infer_img:

+ valid_set: totaltext # two modes: totaltext evaluates curved words, partvgg evaluates non-curved words

+ save_res_path: ./output/pgnet_r50_vd_totaltext/predicts_pgnet.txt

+ character_dict_path: ppocr/utils/ic15_dict.txt

+ character_type: EN

+ max_text_length: 50 # the max length of a text sequence

+ max_text_nums: 30 # the max number of text sequences in an image

+ tcl_len: 64

+

+Architecture:

+ model_type: e2e

+ algorithm: PGNet

+ Transform:

+ Backbone:

+ name: ResNet

+ layers: 50

+ Neck:

+ name: PGFPN

+ Head:

+ name: PGHead

+

+Loss:

+ name: PGLoss

+ tcl_bs: 64

+ max_text_length: 50 # the same as Global: max_text_length

+ max_text_nums: 30 # the same as Global:max_text_nums

+ pad_num: 36 # padding index, equal to the length of the character dict

+

+Optimizer:

+ name: Adam

+ beta1: 0.9

+ beta2: 0.999

+ lr:

+ learning_rate: 0.001

+ regularizer:

+ name: 'L2'

+ factor: 0

+

+

+PostProcess:

+ name: PGPostProcess

+ score_thresh: 0.5

+ mode: fast # two modes: fast or slow

+Metric:

+ name: E2EMetric

+ gt_mat_dir: # the dir of gt_mat

+ character_dict_path: ppocr/utils/ic15_dict.txt

+ main_indicator: f_score_e2e

+

+Train:

+ dataset:

+ name: PGDataSet

+ label_file_list: [.././train_data/total_text/train/]

+ ratio_list: [1.0]

+ data_format: icdar # two data formats: icdar/textnet

+ transforms:

+ - DecodeImage: # load image

+ img_mode: BGR

+ channel_first: False

+ - PGProcessTrain:

+ batch_size: 14 # same as loader: batch_size_per_card

+ min_crop_size: 24

+ min_text_size: 4

+ max_text_size: 512

+ - KeepKeys:

+ keep_keys: [ 'images', 'tcl_maps', 'tcl_label_maps', 'border_maps','direction_maps', 'training_masks', 'label_list', 'pos_list', 'pos_mask' ] # dataloader will return list in this order

+ loader:

+ shuffle: True

+ drop_last: True

+ batch_size_per_card: 14

+ num_workers: 16

+

+Eval:

+ dataset:

+ name: PGDataSet

+ data_dir: ./train_data/

+ label_file_list: [./train_data/total_text/test/]

+ transforms:

+ - DecodeImage: # load image

+ img_mode: RGB

+ channel_first: False

+ - E2ELabelEncode:

+ - E2EResizeForTest:

+ max_side_len: 768

+ - NormalizeImage:

+ scale: 1./255.

+ mean: [ 0.485, 0.456, 0.406 ]

+ std: [ 0.229, 0.224, 0.225 ]

+ order: 'hwc'

+ - ToCHWImage:

+ - KeepKeys:

+ keep_keys: [ 'image', 'shape', 'polys', 'strs', 'tags', 'img_id']

+ loader:

+ shuffle: False

+ drop_last: False

+ batch_size_per_card: 1 # must be 1

+ num_workers: 2

\ No newline at end of file
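In `e2e_r50_vd_pg.yml`, `eval_batch_step: [ 0, 1000 ]` is read as `[start_step, interval]`. A minimal sketch of the trigger condition, assuming it mirrors the check in PaddleOCR's training loop (`tools/program.py`); the function name `should_evaluate` is hypothetical:

```python
def should_evaluate(global_step: int, eval_batch_step: list) -> bool:
    """Return True when evaluation should run at this global step.

    Assumes eval_batch_step = [start_step, interval], as in
    `eval_batch_step: [ 0, 1000 ]` from e2e_r50_vd_pg.yml.
    """
    start_step, interval = eval_batch_step
    # Evaluate only after start_step, then once every `interval` steps.
    return global_step > start_step and (global_step - start_step) % interval == 0


if __name__ == "__main__":
    steps = [s for s in range(1, 3001) if should_evaluate(s, [0, 1000])]
    print(steps)  # [1000, 2000, 3000]
```

With `[0, 1000]`, evaluation therefore fires at steps 1000, 2000, 3000, and so on.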

diff --git a/deploy/hubserving/readme.md b/deploy/hubserving/readme.md

index ce55b0f0da42b706cef30ab9b7a4c06f02e7c8eb..88f335812de191d860e46f7317bb303a20df8b41 100755

--- a/deploy/hubserving/readme.md

+++ b/deploy/hubserving/readme.md

@@ -2,7 +2,7 @@

PaddleOCR提供2种服务部署方式:

- 基于PaddleHub Serving的部署:代码路径为"`./deploy/hubserving`",按照本教程使用;

-- (coming soon)基于PaddleServing的部署:代码路径为"`./deploy/pdserving`",使用方法参考[文档](../../deploy/pdserving/readme.md)。

+- 基于PaddleServing的部署:代码路径为"`./deploy/pdserving`",使用方法参考[文档](../../deploy/pdserving/README_CN.md)。

# 基于PaddleHub Serving的服务部署

diff --git a/deploy/hubserving/readme_en.md b/deploy/hubserving/readme_en.md

index 95223ffd82f8264d56158e8e7917c983b07f679d..c948fed1eefe9f5f83f63a82699cdac3548fad52 100755

--- a/deploy/hubserving/readme_en.md

+++ b/deploy/hubserving/readme_en.md

@@ -2,7 +2,7 @@ English | [简体中文](readme.md)

PaddleOCR provides 2 service deployment methods:

- Based on **PaddleHub Serving**: Code path is "`./deploy/hubserving`". Please follow this tutorial.

-- (coming soon)Based on **PaddleServing**: Code path is "`./deploy/pdserving`". Please refer to the [tutorial](../../deploy/pdserving/readme.md) for usage.

+- Based on **PaddleServing**: Code path is "`./deploy/pdserving`". Please refer to the [tutorial](../../deploy/pdserving/README.md) for usage.

# Service deployment based on PaddleHub Serving

diff --git a/deploy/pdserving/README.md b/deploy/pdserving/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..88426ba9c508a4020af0a6203010d683cb73eba9

--- /dev/null

+++ b/deploy/pdserving/README.md

@@ -0,0 +1,158 @@

+# OCR Pipeline WebService

+

+(English|[简体中文](./README_CN.md))

+

+PaddleOCR provides two service deployment methods:

+- Based on **PaddleHub Serving**: Code path is "`./deploy/hubserving`". Please refer to the [tutorial](../../deploy/hubserving/readme_en.md)

+- Based on **PaddleServing**: Code path is "`./deploy/pdserving`". Please follow this tutorial.

+

+# Service deployment based on PaddleServing

+

+This document describes how to use [PaddleServing](https://github.com/PaddlePaddle/Serving/blob/develop/README.md) to deploy the PPOCR dynamic graph model as an online pipeline service.

+

+Some key features of Paddle Serving:

+- Seamless integration with the Paddle training pipeline: most Paddle models can be deployed with a single command.

+- Industrial-grade serving features, such as model management, online loading, and online A/B testing.

+- Highly concurrent and efficient communication between clients and servers.

+

+For an introduction to the Paddle Serving deployment framework and its tutorials, refer to the [documentation](https://github.com/PaddlePaddle/Serving/blob/develop/README.md).

+

+

+## Contents

+- [Environment preparation](#environment-preparation)

+- [Model conversion](#model-conversion)

+- [Paddle Serving pipeline deployment](#paddle-serving-pipeline-deployment)

+- [FAQ](#faq)

+

+

+## Environment preparation

+

+Both the PaddleOCR and the Paddle Serving runtime environments are required.

+

+1. Prepare the PaddleOCR operating environment by following this [link](../../doc/doc_en/installation_en.md).

+

+2. Prepare the PaddleServing operating environment as follows:

+

+ Install the serving server, which is used to start the service:

+ ```

+ pip3 install paddle-serving-server==0.5.0 # for CPU

+ pip3 install paddle-serving-server-gpu==0.5.0 # for GPU

+ # For other GPU environments, confirm your CUDA/TensorRT versions and run the matching command below

+ pip3 install paddle-serving-server-gpu==0.5.0.post9 # GPU with CUDA9.0

+ pip3 install paddle-serving-server-gpu==0.5.0.post10 # GPU with CUDA10.0

+ pip3 install paddle-serving-server-gpu==0.5.0.post101 # GPU with CUDA10.1 + TensorRT6

+ pip3 install paddle-serving-server-gpu==0.5.0.post11 # GPU with CUDA10.1 + TensorRT7

+ ```

+

+3. Install the client, which is used to send requests to the service:

+ ```

+ pip3 install paddle-serving-client==0.5.0 # for CPU

+

+ pip3 install paddle-serving-client-gpu==0.5.0 # for GPU

+ ```

+

+4. Install serving-app

+ ```

+ pip3 install paddle-serving-app==0.3.0

+ # Patch local_predict.py so that it can load dynamic graph models

+ # Locate the install directory of paddle_serving_app (the path below depends on your Python installation)

+ vim /usr/local/lib/python3.7/site-packages/paddle_serving_app/local_predict.py

+ # Replace line 85 of local_predict.py, `config = AnalysisConfig(model_path)`, with:

+ if os.path.exists(os.path.join(model_path, "__params__")):

+ config = AnalysisConfig(os.path.join(model_path, "__model__"), os.path.join(model_path, "__params__"))

+ else:

+ config = AnalysisConfig(model_path)

+ ```

+

+ **Note:** To install the latest version of PaddleServing, refer to this [link](https://github.com/PaddlePaddle/Serving/blob/develop/doc/LATEST_PACKAGES.md).

+

+

+

+## Model conversion

+When using PaddleServing for service deployment, you need to convert the saved inference model into a serving model that is easy to deploy.

+

+First, download the PPOCR [inference models](https://github.com/PaddlePaddle/PaddleOCR#pp-ocr-20-series-model-listupdate-on-dec-15):

+```

+# Download and unzip the OCR text detection model

+wget https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_det_infer.tar && tar xf ch_ppocr_server_v2.0_det_infer.tar

+# Download and unzip the OCR text recognition model

+wget https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_rec_infer.tar && tar xf ch_ppocr_server_v2.0_rec_infer.tar

+

+```

+Then use the installed paddle_serving_client tool to convert the inference models into serving models:

+```

+# Detection model conversion

+python3 -m paddle_serving_client.convert --dirname ./ch_ppocr_server_v2.0_det_infer/ \

+ --model_filename inference.pdmodel \

+ --params_filename inference.pdiparams \

+ --serving_server ./ppocr_det_server_2.0_serving/ \

+ --serving_client ./ppocr_det_server_2.0_client/

+

+# Recognition model conversion

+python3 -m paddle_serving_client.convert --dirname ./ch_ppocr_server_v2.0_rec_infer/ \

+ --model_filename inference.pdmodel \

+ --params_filename inference.pdiparams \

+ --serving_server ./ppocr_rec_server_2.0_serving/ \

+ --serving_client ./ppocr_rec_server_2.0_client/

+

+```

+

+After the detection model is converted, the folders `ppocr_det_server_2.0_serving` and `ppocr_det_server_2.0_client` appear in the current directory, with the following structure:

+```

+|- ppocr_det_server_2.0_serving/

+ |- __model__

+ |- __params__

+ |- serving_server_conf.prototxt

+ |- serving_server_conf.stream.prototxt

+

+|- ppocr_det_server_2.0_client

+ |- serving_client_conf.prototxt

+ |- serving_client_conf.stream.prototxt

+

+```

+The recognition model is converted in the same way, producing `ppocr_rec_server_2.0_serving` and `ppocr_rec_server_2.0_client`.

+

+

+## Paddle Serving pipeline deployment

+

+1. Download the PaddleOCR code. If you have already downloaded it, skip this step.

+ ```

+ git clone https://github.com/PaddlePaddle/PaddleOCR

+

+ # Enter the working directory

+ cd PaddleOCR/deploy/pdserving/

+ ```

+

+ The pdserving directory contains the code that starts the pipeline service and sends prediction requests, including:

+ ```

+ __init__.py

+ config.yml # Configuration file for starting the service

+ ocr_reader.py # Pre-processing and post-processing code for the OCR models

+ pipeline_http_client.py # Script that sends pipeline prediction requests

+ web_service.py # Script that starts the pipeline server

+ ```

+

+2. Run the following command to start the service.

+ ```

+ # Start the service and save the running log in log.txt

+ python3 web_service.py &>log.txt &

+ ```

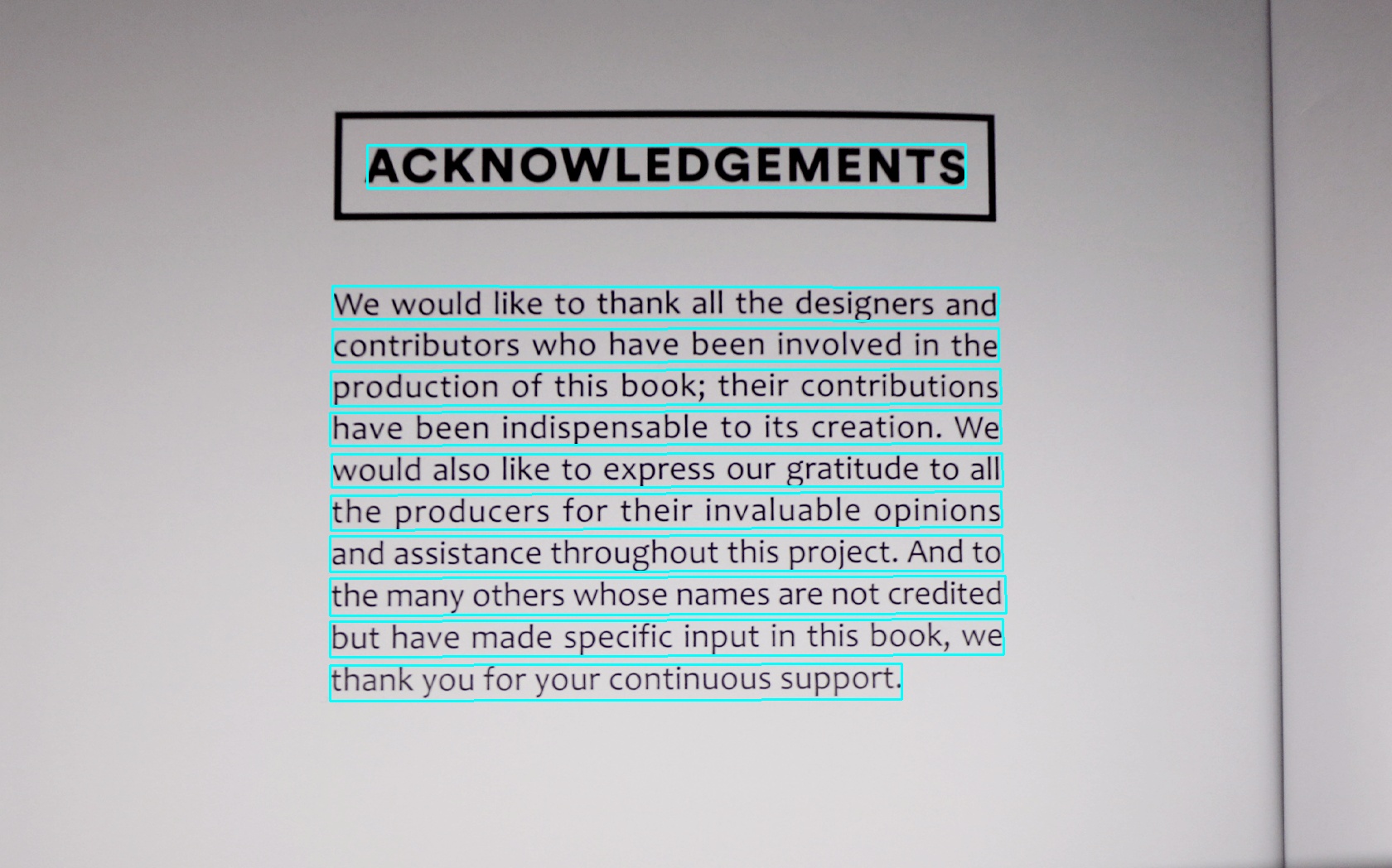

+ After the service starts successfully, a log similar to the following is printed in log.txt:

+ ![](./imgs/start_server.png)

+

+3. Send a service request:

+ ```

+ python3 pipeline_http_client.py

+ ```

+ After the script runs successfully, the model's prediction results are printed in the terminal. An example result:

+ ![](./imgs/results.png)

+

+

+## FAQ

+**Q1**: No result is returned after sending the request.

+

+**A1**: Do not use a proxy when starting the service or sending requests. Disable the proxy before starting the service and before sending requests with the following commands:

+```

+unset https_proxy

+unset http_proxy

+```
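The `pipeline_http_client.py` script used in step 3 is not shown here. A minimal standard-library sketch of what such a client might send, assuming the Paddle Serving pipeline HTTP convention of a JSON body with parallel `key`/`value` lists, a service URL of `http://127.0.0.1:9999/ocr/prediction` (the port comes from `http_port` in `config.yml`; the `ocr` service name is an assumption), and a base64-encoded image under the hypothetical key `image`:

```python
import base64
import json
import urllib.request


def build_payload(image_bytes: bytes) -> dict:
    """Wrap raw image bytes in the assumed pipeline request format."""
    encoded = base64.b64encode(image_bytes).decode("utf-8")
    # Parallel lists: key[i] names an input, value[i] carries its data.
    return {"key": ["image"], "value": [encoded]}


def send_request(image_path: str,
                 url: str = "http://127.0.0.1:9999/ocr/prediction") -> dict:
    """POST one image to the pipeline service and return the decoded JSON reply."""
    with open(image_path, "rb") as f:
        payload = build_payload(f.read())
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    # The FAQ advises unsetting http_proxy/https_proxy; an empty ProxyHandler
    # also bypasses any proxy configured in the environment for this request.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(req, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    print(build_payload(b"abc"))  # {'key': ['image'], 'value': ['YWJj']}
```

Bypassing the proxy in code is one way to sidestep the Q1 symptom without changing the shell environment; the actual request keys must match whatever `web_service.py` expects.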

diff --git a/deploy/pdserving/README_CN.md b/deploy/pdserving/README_CN.md

new file mode 100644

index 0000000000000000000000000000000000000000..3e3f1bde0e824fe6133a1c169b9b03e614904c26

--- /dev/null

+++ b/deploy/pdserving/README_CN.md

@@ -0,0 +1,160 @@

+# PPOCR 服务化部署

+

+([English](./README.md)|简体中文)

+

+PaddleOCR提供2种服务部署方式:

+- 基于PaddleHub Serving的部署:代码路径为"`./deploy/hubserving`",使用方法参考[文档](../../deploy/hubserving/readme.md);

+- 基于PaddleServing的部署:代码路径为"`./deploy/pdserving`",按照本教程使用。

+

+# 基于PaddleServing的服务部署

+

+本文档将介绍如何使用[PaddleServing](https://github.com/PaddlePaddle/Serving/blob/develop/README_CN.md)工具部署PPOCR

+动态图模型的pipeline在线服务。

+

+相比较于hubserving部署,PaddleServing具备以下优点:

+- 支持客户端和服务端之间高并发和高效通信

+- 支持 工业级的服务能力 例如模型管理,在线加载,在线A/B测试等

+- 支持 多种编程语言 开发客户端,例如C++, Python和Java

+

+更多有关PaddleServing服务化部署框架介绍和使用教程参考[文档](https://github.com/PaddlePaddle/Serving/blob/develop/README_CN.md)。

+

+## 目录

+- [环境准备](#环境准备)

+- [模型转换](#模型转换)

+- [Paddle Serving pipeline部署](#paddle-serving-pipeline部署)

+- [FAQ](#faq)

+

+

+## 环境准备

+

+需要准备PaddleOCR的运行环境和Paddle Serving的运行环境。

+

+- 准备PaddleOCR的运行环境参考[链接](../../doc/doc_ch/installation.md)

+

+- 准备PaddleServing的运行环境,步骤如下

+

+1. 安装serving,用于启动服务

+ ```

+ pip3 install paddle-serving-server==0.5.0 # for CPU

+ pip3 install paddle-serving-server-gpu==0.5.0 # for GPU

+ # 其他GPU环境需要确认环境再选择执行如下命令

+ pip3 install paddle-serving-server-gpu==0.5.0.post9 # GPU with CUDA9.0

+ pip3 install paddle-serving-server-gpu==0.5.0.post10 # GPU with CUDA10.0

+ pip3 install paddle-serving-server-gpu==0.5.0.post101 # GPU with CUDA10.1 + TensorRT6

+ pip3 install paddle-serving-server-gpu==0.5.0.post11 # GPU with CUDA10.1 + TensorRT7

+ ```

+

+2. 安装client,用于向服务发送请求

+ ```

+ pip3 install paddle-serving-client==0.5.0 # for CPU

+

+ pip3 install paddle-serving-client-gpu==0.5.0 # for GPU

+ ```

+

+3. 安装serving-app

+ ```

+ pip3 install paddle-serving-app==0.3.0

+ ```

+ **note:** 安装0.3.0版本的serving-app后,为了能加载动态图模型,需要修改serving_app的源码,具体为:

+ ```

+ # 找到paddle_serving_app的安装目录,找到并编辑local_predict.py文件

+ vim /usr/local/lib/python3.7/site-packages/paddle_serving_app/local_predict.py

+ # 将local_predict.py 的第85行 config = AnalysisConfig(model_path) 替换为:

+ if os.path.exists(os.path.join(model_path, "__params__")):

+ config = AnalysisConfig(os.path.join(model_path, "__model__"), os.path.join(model_path, "__params__"))

+ else:

+ config = AnalysisConfig(model_path)

+ ```

+

+ **Note:** 如果要安装最新版本的PaddleServing参考[链接](https://github.com/PaddlePaddle/Serving/blob/develop/doc/LATEST_PACKAGES.md)。

+

+

+## 模型转换

+

+使用PaddleServing做服务化部署时,需要将保存的inference模型转换为serving易于部署的模型。

+

+首先,下载PPOCR的[inference模型](https://github.com/PaddlePaddle/PaddleOCR#pp-ocr-20-series-model-listupdate-on-dec-15)

+```

+# 下载并解压 OCR 文本检测模型

+wget https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_det_infer.tar && tar xf ch_ppocr_server_v2.0_det_infer.tar

+# 下载并解压 OCR 文本识别模型

+wget https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_rec_infer.tar && tar xf ch_ppocr_server_v2.0_rec_infer.tar

+```

+

+接下来,用安装的paddle_serving_client把下载的inference模型转换成易于server部署的模型格式。

+

+```

+# 转换检测模型

+python3 -m paddle_serving_client.convert --dirname ./ch_ppocr_server_v2.0_det_infer/ \

+ --model_filename inference.pdmodel \

+ --params_filename inference.pdiparams \

+ --serving_server ./ppocr_det_server_2.0_serving/ \

+ --serving_client ./ppocr_det_server_2.0_client/

+

+# 转换识别模型

+python3 -m paddle_serving_client.convert --dirname ./ch_ppocr_server_v2.0_rec_infer/ \

+ --model_filename inference.pdmodel \

+ --params_filename inference.pdiparams \

+ --serving_server ./ppocr_rec_server_2.0_serving/ \

+ --serving_client ./ppocr_rec_server_2.0_client/

+```

+

+检测模型转换完成后,会在当前文件夹多出`ppocr_det_server_2.0_serving` 和`ppocr_det_server_2.0_client`的文件夹,具备如下格式:

+```

+|- ppocr_det_server_2.0_serving/

+ |- __model__

+ |- __params__

+ |- serving_server_conf.prototxt

+ |- serving_server_conf.stream.prototxt

+

+|- ppocr_det_server_2.0_client

+ |- serving_client_conf.prototxt

+ |- serving_client_conf.stream.prototxt

+

+```

+识别模型同理。

+

+

+## Paddle Serving pipeline部署

+

+1. 下载PaddleOCR代码,若已下载可跳过此步骤

+ ```

+ git clone https://github.com/PaddlePaddle/PaddleOCR

+

+ # 进入到工作目录

+ cd PaddleOCR/deploy/pdserving/

+ ```

+ pdserving目录包含启动pipeline服务和发送预测请求的代码,包括:

+ ```

+ __init__.py

+ config.yml # 启动服务的配置文件

+ ocr_reader.py # OCR模型预处理和后处理的代码实现

+ pipeline_http_client.py # 发送pipeline预测请求的脚本

+ web_service.py # 启动pipeline服务端的脚本

+ ```

+

+2. 启动服务可运行如下命令:

+ ```

+ # 启动服务,运行日志保存在log.txt

+ python3 web_service.py &>log.txt &

+ ```

+ 成功启动服务后,log.txt中会打印类似如下日志

+ ![](./imgs/start_server.png)

+

+3. 发送服务请求:

+ ```

+ python3 pipeline_http_client.py

+ ```

+ 成功运行后,模型预测的结果会打印在cmd窗口中,结果示例为:

+ ![](./imgs/results.png)

+

+

+

+## FAQ

+**Q1**: 发送请求后没有结果返回或者提示输出解码报错

+

+**A1**: 启动服务和发送请求时不要设置代理,可以在启动服务前和发送请求前关闭代理,关闭代理的命令是:

+```

+unset https_proxy

+unset http_proxy

+```
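Both READMEs hard-code `/usr/local/lib/python3.7/site-packages/paddle_serving_app/local_predict.py` as the file to patch, but the location differs between Python installations. A small sketch that asks the interpreter where an installed package lives, using only `importlib.util.find_spec`; the helper name `find_package_file` is hypothetical:

```python
import importlib.util
import os
from typing import Optional


def find_package_file(package: str, filename: str) -> Optional[str]:
    """Return the path of `filename` inside an installed package, or None."""
    spec = importlib.util.find_spec(package)
    if spec is None or not spec.submodule_search_locations:
        return None  # not installed, or a plain module rather than a package
    pkg_dir = list(spec.submodule_search_locations)[0]
    path = os.path.join(pkg_dir, filename)
    return path if os.path.exists(path) else None


if __name__ == "__main__":
    # Prints the local_predict.py path once paddle-serving-app is installed, else None.
    print(find_package_file("paddle_serving_app", "local_predict.py"))
```

Running it with the same `python3` that runs `web_service.py` ensures the patched file is the one the service actually imports.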

diff --git a/deploy/pdserving/__init__.py b/deploy/pdserving/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..185a92b8d94d3426d616c0624f0f2ee04339349e

--- /dev/null

+++ b/deploy/pdserving/__init__.py

@@ -0,0 +1,13 @@

+# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

diff --git a/deploy/pdserving/config.yml b/deploy/pdserving/config.yml

new file mode 100644

index 0000000000000000000000000000000000000000..aef735dbfab5b314f9209a7cc91e7fd5b6fc615c

--- /dev/null

+++ b/deploy/pdserving/config.yml

@@ -0,0 +1,71 @@

+# RPC port. rpc_port and http_port must not both be empty. When rpc_port is empty and http_port is not, rpc_port is automatically set to http_port+1

+rpc_port: 18090

+

+# HTTP port. rpc_port and http_port must not both be empty. When rpc_port is available and http_port is empty, http_port is not generated automatically

+http_port: 9999

+

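+# worker_num: maximum concurrency. When build_dag_each_worker=True, the framework creates worker_num processes, each of which builds its own gRPC server and DAG

+# When build_dag_each_worker=False, the framework sets max_workers=worker_num for the gRPC thread pool of the main thread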

+worker_num: 20

+

+# build_dag_each_worker: False, the framework creates a single DAG within the process; True, the framework creates an independent DAG in each process

+build_dag_each_worker: false

+

+dag:

+ # Op resource type: True for the thread model; False for the process model

+ is_thread_op: False

+

+ # Number of retries

+ retry: 1

+

+ # Profiling: True generates Timeline performance data, at some cost to performance; False disables it

+ use_profile: False

+

+ tracer:

+ interval_s: 10

+op:

+ det:

+ # Concurrency: thread-level when is_thread_op=True, otherwise process-level

+ concurrency: 4

+

+ # When the op has no server_endpoints configured, the local service configuration is read from local_service_conf

+ local_service_conf:

+ # Client type: brpc, grpc or local_predictor. local_predictor does not start a Serving service and runs prediction in-process

+ client_type: local_predictor

+

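+ # Path to the det serving model (e.g. the ppocr_det_server_2.0_serving directory produced by the model conversion step)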

+ model_config: /paddle/serving/models/det_serving_server/ #ocr_det_model

+

+ # Fetch list; entries must match the alias_name of fetch_var in client_config

+ fetch_list: ["save_infer_model/scale_0.tmp_1"]

+

+ # Device IDs: when devices is "" or omitted, prediction runs on CPU; when devices is "0" or "0,1,2", prediction runs on the listed GPU cards

+ devices: "2"

+

+ ir_optim: True

+ rec:

+ # Concurrency: thread-level when is_thread_op=True, otherwise process-level

+ concurrency: 1

+

+ # Timeout, in ms

+ timeout: -1

+

+ # Number of retries for Serving interaction (no retry by default)

+ retry: 1

+

+ # When the op has no server_endpoints configured, the local service configuration is read from local_service_conf

+ local_service_conf:

+

+ # Client type: brpc, grpc or local_predictor. local_predictor does not start a Serving service and runs prediction in-process

+ client_type: local_predictor

+

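+ # Path to the rec serving model (e.g. the ppocr_rec_server_2.0_serving directory produced by the model conversion step)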

+ model_config: /paddle/serving/models/rec_serving_server/ #ocr_rec_model

+

+ # Fetch list; entries must match the alias_name of fetch_var in client_config

+ fetch_list: ["save_infer_model/scale_0.tmp_1"] #["ctc_greedy_decoder_0.tmp_0", "softmax_0.tmp_0"]

+

+ # Device IDs: when devices is "" or omitted, prediction runs on CPU; when devices is "0" or "0,1,2", prediction runs on the listed GPU cards

+ devices: "2"

+

+ ir_optim: True
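The comments in `config.yml` state two rules: an empty `rpc_port` falls back to `http_port + 1` (and both may not be empty), and `devices` selects CPU when empty or a list of GPU card IDs otherwise. A minimal sketch of those rules exactly as the comments describe them, not Paddle Serving's actual implementation; `resolve_ports` and `parse_devices` are hypothetical names:

```python
from typing import List, Optional, Tuple


def resolve_ports(rpc_port: Optional[int],
                  http_port: Optional[int]) -> Tuple[int, Optional[int]]:
    """Apply the port rules described in config.yml's comments."""
    if rpc_port is None and http_port is None:
        raise ValueError("rpc_port and http_port must not both be empty")
    if rpc_port is None:
        # rpc_port empty, http_port set: rpc_port defaults to http_port + 1.
        rpc_port = http_port + 1
    # rpc_port set and http_port empty: http_port is left unset.
    return rpc_port, http_port


def parse_devices(devices: Optional[str]) -> List[int]:
    """Return GPU card IDs; an empty list means CPU prediction."""
    if not devices:
        return []
    return [int(d) for d in devices.split(",") if d.strip()]


if __name__ == "__main__":
    print(resolve_ports(18090, 9999))  # (18090, 9999)
    print(resolve_ports(None, 9999))   # (10000, 9999)
    print(parse_devices(""))           # []
    print(parse_devices("0,1,2"))      # [0, 1, 2]
```

Under these rules, the shipped `devices: "2"` targets the third GPU card, so single-GPU machines would likely need `"0"` instead.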

diff --git a/deploy/imgs/cpp_infer_pred_12.png b/deploy/pdserving/imgs/cpp_infer_pred_12.png

similarity index 100%

rename from deploy/imgs/cpp_infer_pred_12.png

rename to deploy/pdserving/imgs/cpp_infer_pred_12.png

diff --git a/deploy/imgs/demo.png b/deploy/pdserving/imgs/demo.png

similarity index 100%

rename from deploy/imgs/demo.png

rename to deploy/pdserving/imgs/demo.png

diff --git a/deploy/pdserving/imgs/results.png b/deploy/pdserving/imgs/results.png

new file mode 100644

index 0000000000000000000000000000000000000000..35322bf9462c859bb2d158dd1d50ab52f35bad41

Binary files /dev/null and b/deploy/pdserving/imgs/results.png differ

diff --git a/deploy/pdserving/imgs/start_server.png b/deploy/pdserving/imgs/start_server.png

new file mode 100644

index 0000000000000000000000000000000000000000..60e19ccaed6bd4382f5342101a54d8957879bd60

Binary files /dev/null and b/deploy/pdserving/imgs/start_server.png differ
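`ocr_reader.py`, the next file in this diff, carries the pipeline's pre- and post-processing. Two of its core steps can be sketched in isolation: `DetResizeForTest.resize_image_type0` scales the image by its shorter (or longer) side and snaps both sides to multiples of 32, and `CTCLabelDecode` takes the per-step argmax, skips the blank at index 0, collapses repeats, and maps indices to characters. A minimal NumPy-only sketch of both, assuming the default `limit_type='min'` with `limit_side_len=736`; it computes target sizes only (no OpenCV), and `det_resize_shape` and `ctc_greedy_decode` are hypothetical names:

```python
import numpy as np


def det_resize_shape(h: int, w: int, limit_side_len: int = 736,
                     limit_type: str = "min"):
    """Target (resize_h, resize_w) following resize_image_type0's rules."""
    if limit_type == "max":
        # Shrink so the longer side is at most limit_side_len.
        ratio = float(limit_side_len) / max(h, w) if max(h, w) > limit_side_len else 1.0
    else:
        # Enlarge so the shorter side is at least limit_side_len.
        ratio = float(limit_side_len) / min(h, w) if min(h, w) < limit_side_len else 1.0
    # Snap both sides to the nearest multiple of 32, as the network requires.
    resize_h = int(round(int(h * ratio) / 32) * 32)
    resize_w = int(round(int(w * ratio) / 32) * 32)
    return resize_h, resize_w


def ctc_greedy_decode(probs: np.ndarray, charset: str):
    """Greedy CTC decode for probs of shape [batch, steps, num_classes].

    Class 0 is the CTC blank; class i (i >= 1) maps to charset[i - 1].
    """
    character = ["blank"] + list(charset)
    preds_idx = probs.argmax(axis=2)
    preds_prob = probs.max(axis=2)
    results = []
    for idx_seq, prob_seq in zip(preds_idx, preds_prob):
        chars, confs = [], []
        for t, idx in enumerate(idx_seq):
            if idx == 0:
                continue  # skip the blank token
            if t > 0 and idx_seq[t - 1] == idx:
                continue  # collapse repeated labels
            chars.append(character[int(idx)])
            confs.append(float(prob_seq[t]))
        # Unlike the original (np.mean of an empty list), return 0.0 for empty text.
        results.append(("".join(chars), float(np.mean(confs)) if confs else 0.0))
    return results


if __name__ == "__main__":
    print(det_resize_shape(368, 500))  # (736, 992)
    probs = np.full((1, 5, 4), 0.125)
    for t, c in enumerate([1, 1, 0, 1, 2]):
        probs[0, t, c] = 0.5
    print(ctc_greedy_decode(probs, "abc"))  # [('aab', 0.5)]
```

The blank between the two `a` runs is what lets CTC emit a genuine double letter: the step sequence `a a blank a b` decodes to `aab`, not `ab`.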

diff --git a/deploy/pdserving/ocr_reader.py b/deploy/pdserving/ocr_reader.py

new file mode 100644

index 0000000000000000000000000000000000000000..95110706af13662de11ef0f668558d0dd3abcf52

--- /dev/null

+++ b/deploy/pdserving/ocr_reader.py

@@ -0,0 +1,438 @@

+# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import cv2

+import copy

+import numpy as np

+import math

+import re

+import sys

+import argparse

+import string

+from copy import deepcopy

+import paddle

+

+

+class DetResizeForTest(object):

+ def __init__(self, **kwargs):

+ super(DetResizeForTest, self).__init__()

+ self.resize_type = 0

+ if 'image_shape' in kwargs:

+ self.image_shape = kwargs['image_shape']

+ self.resize_type = 1

+ elif 'limit_side_len' in kwargs:

+ self.limit_side_len = kwargs['limit_side_len']

+ self.limit_type = kwargs.get('limit_type', 'min')

+ elif 'resize_long' in kwargs:

+ self.resize_type = 2

+ self.resize_long = kwargs.get('resize_long', 960)

+ else:

+ self.limit_side_len = 736

+ self.limit_type = 'min'

+

+ def __call__(self, data):

+ img = deepcopy(data)

+ src_h, src_w, _ = img.shape

+

+ if self.resize_type == 0:

+ img, [ratio_h, ratio_w] = self.resize_image_type0(img)

+ elif self.resize_type == 2:

+ img, [ratio_h, ratio_w] = self.resize_image_type2(img)

+ else:

+ img, [ratio_h, ratio_w] = self.resize_image_type1(img)

+

+ return img

+

+ def resize_image_type1(self, img):

+ resize_h, resize_w = self.image_shape

+ ori_h, ori_w = img.shape[:2] # (h, w, c)

+ ratio_h = float(resize_h) / ori_h

+ ratio_w = float(resize_w) / ori_w

+ img = cv2.resize(img, (int(resize_w), int(resize_h)))

+ return img, [ratio_h, ratio_w]

+

+ def resize_image_type0(self, img):

+ """

+ resize image to a size multiple of 32 which is required by the network

+ args:

+ img(array): array with shape [h, w, c]

+ return(tuple):

+ img, (ratio_h, ratio_w)

+ """

+ limit_side_len = self.limit_side_len

+ h, w, _ = img.shape

+

+ # limit the max side

+ if self.limit_type == 'max':

+ if max(h, w) > limit_side_len:

+ if h > w:

+ ratio = float(limit_side_len) / h

+ else:

+ ratio = float(limit_side_len) / w

+ else:

+ ratio = 1.

+ else:

+ if min(h, w) < limit_side_len:

+ if h < w:

+ ratio = float(limit_side_len) / h

+ else:

+ ratio = float(limit_side_len) / w

+ else:

+ ratio = 1.

+ resize_h = int(h * ratio)

+ resize_w = int(w * ratio)

+

+ resize_h = int(round(resize_h / 32) * 32)

+ resize_w = int(round(resize_w / 32) * 32)

+

+ try:

+ if int(resize_w) <= 0 or int(resize_h) <= 0:

+ return None, (None, None)

+ img = cv2.resize(img, (int(resize_w), int(resize_h)))

+ except Exception:

+ print(img.shape, resize_w, resize_h)

+ raise

+ ratio_h = resize_h / float(h)

+ ratio_w = resize_w / float(w)

+ # return img, np.array([h, w])

+ return img, [ratio_h, ratio_w]

+

+ def resize_image_type2(self, img):

+ h, w, _ = img.shape

+

+ resize_w = w

+ resize_h = h

+

+ # Fix the longer side

+ if resize_h > resize_w:

+ ratio = float(self.resize_long) / resize_h

+ else:

+ ratio = float(self.resize_long) / resize_w

+

+ resize_h = int(resize_h * ratio)

+ resize_w = int(resize_w * ratio)

+

+ max_stride = 128

+ resize_h = (resize_h + max_stride - 1) // max_stride * max_stride

+ resize_w = (resize_w + max_stride - 1) // max_stride * max_stride

+ img = cv2.resize(img, (int(resize_w), int(resize_h)))

+ ratio_h = resize_h / float(h)

+ ratio_w = resize_w / float(w)

+

+ return img, [ratio_h, ratio_w]

+

+

+class BaseRecLabelDecode(object):

+ """ Convert between text-label and text-index """

+

+ def __init__(self, config):

+ support_character_type = [

+ 'ch', 'en', 'EN_symbol', 'french', 'german', 'japan', 'korean',

+ 'it', 'xi', 'pu', 'ru', 'ar', 'ta', 'ug', 'fa', 'ur', 'rs', 'oc',

+ 'rsc', 'bg', 'uk', 'be', 'te', 'ka', 'chinese_cht', 'hi', 'mr',

+ 'ne', 'EN'

+ ]

+ character_type = config['character_type']

+ character_dict_path = config['character_dict_path']

+ use_space_char = True

+ assert character_type in support_character_type, "Only {} are supported now but got {}".format(

+ support_character_type, character_type)

+

+ self.beg_str = "sos"

+ self.end_str = "eos"

+

+ if character_type == "en":

+ self.character_str = "0123456789abcdefghijklmnopqrstuvwxyz"

+ dict_character = list(self.character_str)

+ elif character_type == "EN_symbol":

+ # same with ASTER setting (use 94 char).

+ self.character_str = string.printable[:-6]

+ dict_character = list(self.character_str)

+ elif character_type in support_character_type:

+ self.character_str = ""

+ assert character_dict_path is not None, "character_dict_path should not be None when character_type is {}".format(

+ character_type)

+ with open(character_dict_path, "rb") as fin:

+ lines = fin.readlines()

+ for line in lines:

+ line = line.decode('utf-8').strip("\n").strip("\r\n")

+ self.character_str += line

+ if use_space_char:

+ self.character_str += " "

+ dict_character = list(self.character_str)

+

+ else:

+ raise NotImplementedError

+ self.character_type = character_type

+ dict_character = self.add_special_char(dict_character)

+ self.dict = {}

+ for i, char in enumerate(dict_character):

+ self.dict[char] = i

+ self.character = dict_character

+

+ def add_special_char(self, dict_character):

+ return dict_character

+

+ def decode(self, text_index, text_prob=None, is_remove_duplicate=False):

+ """ convert text-index into text-label. """

+ result_list = []

+ ignored_tokens = self.get_ignored_tokens()

+ batch_size = len(text_index)

+ for batch_idx in range(batch_size):

+ char_list = []

+ conf_list = []

+ for idx in range(len(text_index[batch_idx])):

+ if text_index[batch_idx][idx] in ignored_tokens:

+ continue

+ if is_remove_duplicate:

+ # only for predict

+ if idx > 0 and text_index[batch_idx][idx - 1] == text_index[

+ batch_idx][idx]:

+ continue

+ char_list.append(self.character[int(text_index[batch_idx][

+ idx])])

+ if text_prob is not None:

+ conf_list.append(text_prob[batch_idx][idx])

+ else:

+ conf_list.append(1)

+ text = ''.join(char_list)

+ result_list.append((text, np.mean(conf_list)))

+ return result_list

+

+ def get_ignored_tokens(self):

+ return [0] # for ctc blank

+

+

+class CTCLabelDecode(BaseRecLabelDecode):

+ """ Convert between text-label and text-index """

+

+ def __init__(

+ self,

+ config,

+ #character_dict_path=None,

+ #character_type='ch',

+ #use_space_char=False,

+ **kwargs):

+ super(CTCLabelDecode, self).__init__(config)

+

+ def __call__(self, preds, label=None, *args, **kwargs):

+ if isinstance(preds, paddle.Tensor):

+ preds = preds.numpy()

+ preds_idx = preds.argmax(axis=2)

+ preds_prob = preds.max(axis=2)

+ text = self.decode(preds_idx, preds_prob, is_remove_duplicate=True)

+ if label is None:

+ return text

+ label = self.decode(label)

+ return text, label

+

+ def add_special_char(self, dict_character):

+ dict_character = ['blank'] + dict_character

+ return dict_character

+

+

+class CharacterOps(object):
+    """ Convert between text-label and text-index """
+
+    def __init__(self, config):
+        self.character_type = config['character_type']
+        self.loss_type = config['loss_type']
+        if self.character_type == "en":
+            self.character_str = "0123456789abcdefghijklmnopqrstuvwxyz"
+            dict_character = list(self.character_str)
+        elif self.character_type == "ch":
+            character_dict_path = config['character_dict_path']
+            self.character_str = ""
+            with open(character_dict_path, "rb") as fin:
+                lines = fin.readlines()
+                for line in lines:
+                    line = line.decode('utf-8').strip("\n").strip("\r\n")
+                    self.character_str += line
+            dict_character = list(self.character_str)
+        elif self.character_type == "en_sensitive":
+            # same with ASTER setting (use 94 char).
+            self.character_str = string.printable[:-6]
+            dict_character = list(self.character_str)
+        else:
+            self.character_str = None
+        assert self.character_str is not None, \
+            "Unsupported character type: {}".format(self.character_type)
+        self.beg_str = "sos"
+        self.end_str = "eos"
+        if self.loss_type == "attention":
+            dict_character = [self.beg_str, self.end_str] + dict_character
+        self.dict = {}
+        for i, char in enumerate(dict_character):
+            self.dict[char] = i
+        self.character = dict_character
+
+    def encode(self, text):
+        """convert text-label into text-index.
+        input:
+            text: text label of a single image (str).
+
+        output:
+            text: 1-D np.ndarray of character indices. Characters that
+                are not in the dictionary are skipped; for character_type
+                "en" the label is lowercased first.
+        """
+        if self.character_type == "en":
+            text = text.lower()
+
+        text_list = []
+        for char in text:
+            if char not in self.dict:
+                continue
+            text_list.append(self.dict[char])
+        text = np.array(text_list)
+        return text
+
+    def decode(self, text_index, is_remove_duplicate=False):
+        """ convert text-index into text-label. """
+        char_list = []
+        char_num = self.get_char_num()
+
+        if self.loss_type == "attention":
+            beg_idx = self.get_beg_end_flag_idx("beg")
+            end_idx = self.get_beg_end_flag_idx("end")
+            ignored_tokens = [beg_idx, end_idx]
+        else:
+            ignored_tokens = [char_num]
+
+        for idx in range(len(text_index)):
+            if text_index[idx] in ignored_tokens:
+                continue
+            if is_remove_duplicate:
+                if idx > 0 and text_index[idx - 1] == text_index[idx]:
+                    continue
+            char_list.append(self.character[text_index[idx]])
+        text = ''.join(char_list)
+        return text
+
+    def get_char_num(self):
+        return len(self.character)
+
+    def get_beg_end_flag_idx(self, beg_or_end):
+        if self.loss_type == "attention":
+            if beg_or_end == "beg":
+                idx = np.array(self.dict[self.beg_str])
+            elif beg_or_end == "end":
+                idx = np.array(self.dict[self.end_str])
+            else:
+                assert False, "Unsupported type %s in get_beg_end_flag_idx"\
+                    % beg_or_end
+            return idx
+        else:
+            err = "error in get_beg_end_flag_idx when using the loss %s"\
+                % (self.loss_type)
+            assert False, err
+
+

+class OCRReader(object):
+    def __init__(self,
+                 algorithm="CRNN",
+                 image_shape=[3, 32, 320],
+                 char_type="ch",
+                 batch_num=1,
+                 char_dict_path="./ppocr_keys_v1.txt"):
+        self.rec_image_shape = image_shape
+        self.character_type = char_type
+        self.rec_batch_num = batch_num
+        char_ops_params = {}
+        char_ops_params["character_type"] = char_type
+        char_ops_params["character_dict_path"] = char_dict_path
+        char_ops_params['loss_type'] = 'ctc'
+        self.char_ops = CharacterOps(char_ops_params)
+        self.label_ops = CTCLabelDecode(char_ops_params)
+
+    def resize_norm_img(self, img, max_wh_ratio):
+        imgC, imgH, imgW = self.rec_image_shape
+        if self.character_type == "ch":
+            imgW = int(32 * max_wh_ratio)
+        h = img.shape[0]
+        w = img.shape[1]
+        ratio = w / float(h)
+        if math.ceil(imgH * ratio) > imgW:
+            resized_w = imgW
+        else:
+            resized_w = int(math.ceil(imgH * ratio))
+        resized_image = cv2.resize(img, (resized_w, imgH))
+        resized_image = resized_image.astype('float32')
+        resized_image = resized_image.transpose((2, 0, 1)) / 255
+        resized_image -= 0.5
+        resized_image /= 0.5
+        padding_im = np.zeros((imgC, imgH, imgW), dtype=np.float32)
+
+        padding_im[:, :, 0:resized_w] = resized_image
+        return padding_im
+
+    def preprocess(self, img_list):
+        img_num = len(img_list)
+        norm_img_batch = []
+        max_wh_ratio = 0
+        for ino in range(img_num):
+            h, w = img_list[ino].shape[0:2]
+            wh_ratio = w * 1.0 / h
+            max_wh_ratio = max(max_wh_ratio, wh_ratio)
+
+        for ino in range(img_num):
+            norm_img = self.resize_norm_img(img_list[ino], max_wh_ratio)
+            norm_img = norm_img[np.newaxis, :]
+            norm_img_batch.append(norm_img)
+        norm_img_batch = np.concatenate(norm_img_batch)
+        norm_img_batch = norm_img_batch.copy()
+
+        return norm_img_batch[0]
+
+    def postprocess_old(self, outputs, with_score=False):
+        rec_res = []
+        rec_idx_lod = outputs["ctc_greedy_decoder_0.tmp_0.lod"]
+        rec_idx_batch = outputs["ctc_greedy_decoder_0.tmp_0"]
+        if with_score:
+            predict_lod = outputs["softmax_0.tmp_0.lod"]
+        for rno in range(len(rec_idx_lod) - 1):
+            beg = rec_idx_lod[rno]
+            end = rec_idx_lod[rno + 1]
+            if isinstance(rec_idx_batch, list):
+                rec_idx_tmp = [x[0] for x in rec_idx_batch[beg:end]]
+            else:  # nd array
+                rec_idx_tmp = rec_idx_batch[beg:end, 0]
+            preds_text = self.char_ops.decode(rec_idx_tmp)
+            if with_score:
+                beg = predict_lod[rno]
+                end = predict_lod[rno + 1]
+                if isinstance(outputs["softmax_0.tmp_0"], list):
+                    outputs["softmax_0.tmp_0"] = np.array(outputs[
+                        "softmax_0.tmp_0"]).astype(np.float32)
+                probs = outputs["softmax_0.tmp_0"][beg:end, :]
+                ind = np.argmax(probs, axis=1)
+                blank = probs.shape[1]
+                valid_ind = np.where(ind != (blank - 1))[0]
+                score = np.mean(probs[valid_ind, ind[valid_ind]])
+                rec_res.append([preds_text, score])
+            else:
+                rec_res.append([preds_text])
+        return rec_res
+
+    def postprocess(self, outputs, with_score=False):
+        preds = outputs["save_infer_model/scale_0.tmp_1"]
+        try:
+            preds = preds.numpy()
+        except AttributeError:  # already a numpy array
+            pass
+        preds_idx = preds.argmax(axis=2)
+        preds_prob = preds.max(axis=2)
+        text = self.label_ops.decode(
+            preds_idx, preds_prob, is_remove_duplicate=True)
+        return text
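`OCRReader.postprocess` and `CTCLabelDecode.__call__` both rely on greedy CTC decoding in `BaseRecLabelDecode.decode`: take the best class per time step, skip the blank token (index 0, since `add_special_char` prepends `'blank'`), and skip a step that repeats the previous step's index. The standalone sketch below reproduces that logic on a plain NumPy array; the function name `ctc_greedy_decode` and the toy character list are illustrative assumptions, not part of the PaddleOCR code. One deliberate difference: it returns a confidence of `0.0` for an empty result, where `np.mean([])` in the original yields `nan`.

```python
import numpy as np


def ctc_greedy_decode(preds, characters, blank=0):
    """Greedy CTC decoding for a batch of per-step class scores.

    preds: array of shape (batch, time_steps, num_classes).
    characters: list mapping class index -> character.
    Returns a list of (text, mean_confidence) tuples.
    """
    preds_idx = preds.argmax(axis=2)  # best class per time step
    preds_prob = preds.max(axis=2)    # score of that class
    results = []
    for seq_idx, seq_prob in zip(preds_idx, preds_prob):
        chars, confs = [], []
        for t, cls in enumerate(seq_idx):
            if cls == blank:
                continue  # blank separates characters and is never emitted
            if t > 0 and seq_idx[t - 1] == cls:
                continue  # collapse consecutive repeats of the same class
            chars.append(characters[int(cls)])
            confs.append(float(seq_prob[t]))
        mean_conf = float(np.mean(confs)) if confs else 0.0
        results.append(("".join(chars), mean_conf))
    return results


# Toy example: classes are [blank, "a", "b"] over 5 time steps.
characters = ["blank", "a", "b"]
scores = np.array([[
    [0.1, 0.8, 0.1],    # "a"
    [0.1, 0.7, 0.2],    # "a" again, collapsed
    [0.9, 0.05, 0.05],  # blank
    [0.2, 0.7, 0.1],    # "a" after a blank, kept
    [0.1, 0.1, 0.8],    # "b"
]])
print(ctc_greedy_decode(scores, characters))
```

Because the repeat check compares against the raw previous index (blank included), "a a" collapses to one character while "a blank a" keeps both, which is the standard CTC collapsing rule.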

diff --git a/deploy/pdserving/pipeline_http_client.py b/deploy/pdserving/pipeline_http_client.py

new file mode 100644

index 0000000000000000000000000000000000000000..88c4a81ea8bbed80d37b5fbfea6bf01b38f9613a

--- /dev/null

+++ b/deploy/pdserving/pipeline_http_client.py

@@ -0,0 +1,38 @@
+# Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.
+#
+# Licensed under the Apache License, Version 2.0 (the "License");
+# you may not use this file except in compliance with the License.
+# You may obtain a copy of the License at
+#
+#     http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing, software
+# distributed under the License is distributed on an "AS IS" BASIS,
+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+# See the License for the specific language governing permissions and
+# limitations under the License.
+
+import base64
+import json
+import os
+
+import requests
+
+
+def cv2_to_base64(image):
+    return base64.b64encode(image).decode('utf8')
+
+
+url = "http://127.0.0.1:9999/ocr/prediction"
+test_img_dir = "../../doc/imgs/"
+for img_file in os.listdir(test_img_dir):
+    with open(os.path.join(test_img_dir, img_file), 'rb') as file:
+        image_data = file.read()
+
+    image = cv2_to_base64(image_data)
+
+    data = {"key": ["image"], "value": [image]}
+    r = requests.post(url=url, data=json.dumps(data))
+    print(r.json())
+
+print("==> total number of test imgs: ", len(os.listdir(test_img_dir)))
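The HTTP client posts a JSON body whose `key` list names the pipeline input (`image`) and whose `value` list carries the base64-encoded file bytes; on the server side the pipeline maps these pairs into `input_dict`, and `DetOp.preprocess` base64-decodes `input_dict["image"]`. The sketch below builds that body and round-trips it without a running server. `build_ocr_request` and `decode_ocr_request` are hypothetical helper names; the payload layout is the one used by the client above.

```python
import base64
import json


def build_ocr_request(image_bytes):
    """Build the JSON body posted to the /ocr/prediction endpoint."""
    image_b64 = base64.b64encode(image_bytes).decode("utf8")
    return json.dumps({"key": ["image"], "value": [image_b64]})


def decode_ocr_request(body):
    """Recover the raw image bytes, mirroring the server-side decoding."""
    data = json.loads(body)
    idx = data["key"].index("image")  # value list is aligned with key list
    return base64.b64decode(data["value"][idx].encode("utf8"))


raw = b"\x89PNG\r\n\x1a\n placeholder bytes"
body = build_ocr_request(raw)
print(decode_ocr_request(body) == raw)
```

With the service running, the same body can be sent with `requests.post(url=url, data=build_ocr_request(raw))`.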

diff --git a/deploy/pdserving/pipeline_rpc_client.py b/deploy/pdserving/pipeline_rpc_client.py

new file mode 100644

index 0000000000000000000000000000000000000000..7471f7ed6c1254d550bcf2c19f6ee7c610a2e20e

--- /dev/null

+++ b/deploy/pdserving/pipeline_rpc_client.py

@@ -0,0 +1,36 @@
+# Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.
+#
+# Licensed under the Apache License, Version 2.0 (the "License");
+# you may not use this file except in compliance with the License.
+# You may obtain a copy of the License at
+#
+#     http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing, software
+# distributed under the License is distributed on an "AS IS" BASIS,
+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+# See the License for the specific language governing permissions and
+# limitations under the License.
+try:
+    from paddle_serving_server_gpu.pipeline import PipelineClient
+except ImportError:
+    from paddle_serving_server.pipeline import PipelineClient
+import base64
+import os
+
+client = PipelineClient()
+client.connect(['127.0.0.1:18090'])
+
+
+def cv2_to_base64(image):
+    return base64.b64encode(image).decode('utf8')
+
+
+test_img_dir = "../../doc/imgs/"
+for img_file in os.listdir(test_img_dir):
+    with open(os.path.join(test_img_dir, img_file), 'rb') as file:
+        image_data = file.read()
+    image = cv2_to_base64(image_data)
+    # Send every image (not only the last one) to the pipeline server
+    ret = client.predict(feed_dict={"image": image}, fetch=["res"])
+    print(ret)

diff --git a/deploy/pdserving/web_service.py b/deploy/pdserving/web_service.py

new file mode 100644

index 0000000000000000000000000000000000000000..b47ef65d09dd7aad0e4d00ca852a5c32161ad45b

--- /dev/null

+++ b/deploy/pdserving/web_service.py

@@ -0,0 +1,127 @@

+# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+try:
+    from paddle_serving_server_gpu.web_service import WebService, Op
+except ImportError:
+    from paddle_serving_server.web_service import WebService, Op
+
+import logging
+import numpy as np
+import cv2
+import base64
+# from paddle_serving_app.reader import OCRReader
+from ocr_reader import OCRReader, DetResizeForTest
+from paddle_serving_app.reader import Sequential, ResizeByFactor
+from paddle_serving_app.reader import Div, Normalize, Transpose
+from paddle_serving_app.reader import DBPostProcess, FilterBoxes, GetRotateCropImage, SortedBoxes
+
+_LOGGER = logging.getLogger()
+
+
+class DetOp(Op):
+    def init_op(self):
+        self.det_preprocess = Sequential([
+            DetResizeForTest(), Div(255),
+            Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]), Transpose(
+                (2, 0, 1))
+        ])
+        self.filter_func = FilterBoxes(10, 10)
+        self.post_func = DBPostProcess({
+            "thresh": 0.3,
+            "box_thresh": 0.5,
+            "max_candidates": 1000,
+            "unclip_ratio": 1.5,
+            "min_size": 3
+        })
+
+    def preprocess(self, input_dicts, data_id, log_id):
+        (_, input_dict), = input_dicts.items()
+        data = base64.b64decode(input_dict["image"].encode('utf8'))
+        data = np.frombuffer(data, np.uint8)
+        # Note: class variables(self.var) can only be used in process op mode
+        im = cv2.imdecode(data, cv2.IMREAD_COLOR)
+        self.im = im
+        self.ori_h, self.ori_w, _ = im.shape
+
+        det_img = self.det_preprocess(self.im)
+        _, self.new_h, self.new_w = det_img.shape
+        _LOGGER.debug("det image shape: %s", det_img.shape)
+        return {"x": det_img[np.newaxis, :].copy()}, False, None, ""
+
+    def postprocess(self, input_dicts, fetch_dict, log_id):
+        _LOGGER.debug("input_dicts: %s", input_dicts)
+        det_out = fetch_dict["save_infer_model/scale_0.tmp_1"]
+        ratio_list = [
+            float(self.new_h) / self.ori_h, float(self.new_w) / self.ori_w
+        ]
+        dt_boxes_list = self.post_func(det_out, [ratio_list])
+        dt_boxes = self.filter_func(dt_boxes_list[0], [self.ori_h, self.ori_w])
+        out_dict = {"dt_boxes": dt_boxes, "image": self.im}
+
+        _LOGGER.debug("out dict: %s", out_dict["dt_boxes"])
+        return out_dict, None, ""
+
+
+class RecOp(Op):
+    def init_op(self):
+        self.ocr_reader = OCRReader(
+            char_dict_path="../../ppocr/utils/ppocr_keys_v1.txt")
+
+        self.get_rotate_crop_image = GetRotateCropImage()
+        self.sorted_boxes = SortedBoxes()
+
+    def preprocess(self, input_dicts, data_id, log_id):
+        (_, input_dict), = input_dicts.items()
+        im = input_dict["image"]
+        dt_boxes = input_dict["dt_boxes"]
+        dt_boxes = self.sorted_boxes(dt_boxes)
+        feed_list = []
+        img_list = []
+        max_wh_ratio = 0
+        for dtbox in dt_boxes:
+            boximg = self.get_rotate_crop_image(im, dtbox)
+            img_list.append(boximg)
+            h, w = boximg.shape[0:2]
+            wh_ratio = w * 1.0 / h
+            max_wh_ratio = max(max_wh_ratio, wh_ratio)
+        _, h, w = self.ocr_reader.resize_norm_img(img_list[0],
+                                                  max_wh_ratio).shape
+
+        imgs = np.zeros((len(img_list), 3, h, w), dtype=np.float32)
+        for idx, img in enumerate(img_list):
+            norm_img = self.ocr_reader.resize_norm_img(img, max_wh_ratio)
+            imgs[idx] = norm_img
+        _LOGGER.debug("rec image shape: %s", imgs.shape)
+        feed = {"x": imgs.copy()}
+        return feed, False, None, ""
+
+    def postprocess(self, input_dicts, fetch_dict, log_id):
+        rec_res = self.ocr_reader.postprocess(fetch_dict, with_score=True)
+        res_lst = []
+        for res in rec_res:
+            res_lst.append(res[0])
+        res = {"res": str(res_lst)}
+        return res, None, ""
+
+
+class OcrService(WebService):
+    def get_pipeline_response(self, read_op):
+        det_op = DetOp(name="det", input_ops=[read_op])
+        rec_op = RecOp(name="rec", input_ops=[det_op])
+        return rec_op
+
+
+uci_service = OcrService(name="ocr")
+uci_service.prepare_pipeline_config("config.yml")
+uci_service.run_service()
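`RecOp.preprocess` batches the text crops by finding the widest width/height ratio among them and passing every crop through `OCRReader.resize_norm_img` with that ratio, so all crops share one padded width. The sketch below reproduces that shape logic with NumPy only. The nearest-neighbour `nn_resize` is a stand-in for `cv2.resize` (an assumption made to keep the example dependency-free), and the height of 32 matches the default `image_shape=[3, 32, 320]`; all function names here are hypothetical.

```python
import math

import numpy as np


def nn_resize(img, width, height):
    """Nearest-neighbour resize of an HxWxC array (stand-in for cv2.resize)."""
    rows = (np.arange(height) * img.shape[0] / height).astype(int)
    cols = (np.arange(width) * img.shape[1] / width).astype(int)
    return img[rows][:, cols]


def resize_norm_pad(img, max_wh_ratio, img_h=32, img_c=3):
    """Resize to img_h keeping aspect ratio, scale to [-1, 1], right-pad."""
    img_w = int(img_h * max_wh_ratio)  # shared width for the whole batch
    h, w = img.shape[:2]
    resized_w = min(img_w, int(math.ceil(img_h * w / float(h))))
    resized = nn_resize(img, resized_w, img_h).astype(np.float32)
    resized = resized.transpose((2, 0, 1)) / 255.0  # HWC -> CHW, [0, 1]
    resized = (resized - 0.5) / 0.5                 # [0, 1] -> [-1, 1]
    padded = np.zeros((img_c, img_h, img_w), dtype=np.float32)
    padded[:, :, :resized_w] = resized  # zero padding on the right
    return padded


def batch_crops(crops):
    """Stack crops into an (N, C, H, W) batch sharing the widest ratio."""
    max_wh_ratio = max(c.shape[1] / float(c.shape[0]) for c in crops)
    return np.stack([resize_norm_pad(c, max_wh_ratio) for c in crops])


crops = [np.full((16, 64, 3), 255, np.uint8), np.full((32, 32, 3), 0, np.uint8)]
batch = batch_crops(crops)
print(batch.shape)
```

Here the first crop (ratio 4.0) fills the full 128-pixel width while the second is resized to 32 pixels and zero-padded. Like `RecOp.preprocess`, which indexes `img_list[0]`, this sketch fails on an empty crop list, so a caller may want to handle the case where detection returns no boxes.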

diff --git a/doc/doc_ch/algorithm_overview.md b/doc/doc_ch/algorithm_overview.md

index c8fc280d80056395bbc841a973004b06844b1214..19d7a69c7fb08a8e7fb36c3043aa211de19b9295 100755

--- a/doc/doc_ch/algorithm_overview.md

+++ b/doc/doc_ch/algorithm_overview.md

@@ -28,7 +28,9 @@ List of text detection algorithms open-sourced by PaddleOCR:

| --- | --- | --- | --- | --- | --- |

|SAST|ResNet50_vd|89.63%|78.44%|83.66%|[下载链接](https://paddleocr.bj.bcebos.com/dygraph_v2.0/en/det_r50_vd_sast_totaltext_v2.0_train.tar)|

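-**Note:** SAST model training additionally used public datasets such as icdar2013, icdar2017, COCO-Text, and ArT for fine-tuning. Download the reformatted English public datasets used by PaddleOCR: [Baidu Cloud](https://pan.baidu.com/s/12cPnZcVuV1zn5DOd4mqjVw) (extraction code: 2bpi)
+**Note:** SAST model training additionally used public datasets such as icdar2013, icdar2017, COCO-Text, and ArT for fine-tuning. Download the reformatted English public datasets used by PaddleOCR:
+* [Baidu Cloud](https://pan.baidu.com/s/12cPnZcVuV1zn5DOd4mqjVw) (extraction code: 2bpi)
+* [Google Drive](https://drive.google.com/drive/folders/1ll2-XEVyCQLpJjawLDiRlvo_i4BqHCJe?usp=sharing)
For training and using PaddleOCR text detection algorithms, see the [text detection section of the model training/evaluation tutorial](./detection.md).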

diff --git a/doc/doc_ch/inference.md b/doc/doc_ch/inference.md

index 7968b355ea936d465b3c173c0fcdb3e08f12f16e..f0f7401538a9f8940f671fdcc170aca6c003040d 100755

--- a/doc/doc_ch/inference.md

+++ b/doc/doc_ch/inference.md

@@ -12,7 +12,7 @@ inference model (the model saved by `paddle.jit.save`)

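- [1. Converting Training Models to Inference Models](#训练模型转inference模型)
- [Converting the Detection Model to an Inference Model](#检测模型转inference模型)
- [Converting the Recognition Model to an Inference Model](#识别模型转inference模型)
- - [Converting the Direction Classification Model to an Inference Model](#方向分类模型转inference模型)
+ - [Converting the Direction Classification Model to an Inference Model](#方向分类模型转inference模型)
- [2. Text Detection Model Inference](#文本检测模型推理)
- [1. Ultra-lightweight Chinese Detection Model Inference](#超轻量中文检测模型推理)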

diff --git a/doc/doc_ch/multi_languages.md b/doc/doc_ch/multi_languages.md

new file mode 100644

index 0000000000000000000000000000000000000000..eec09535e7242f62cdebda97cee11086e45c1096

--- /dev/null

+++ b/doc/doc_ch/multi_languages.md

@@ -0,0 +1,313 @@

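+# Multilingual Models
+
+**Recent Updates**
+
+- 2021.4.9 Detection and recognition for **80** languages are supported
+- 2021.4.9 **Lightweight, high-accuracy** English detection and recognition models are supported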

+

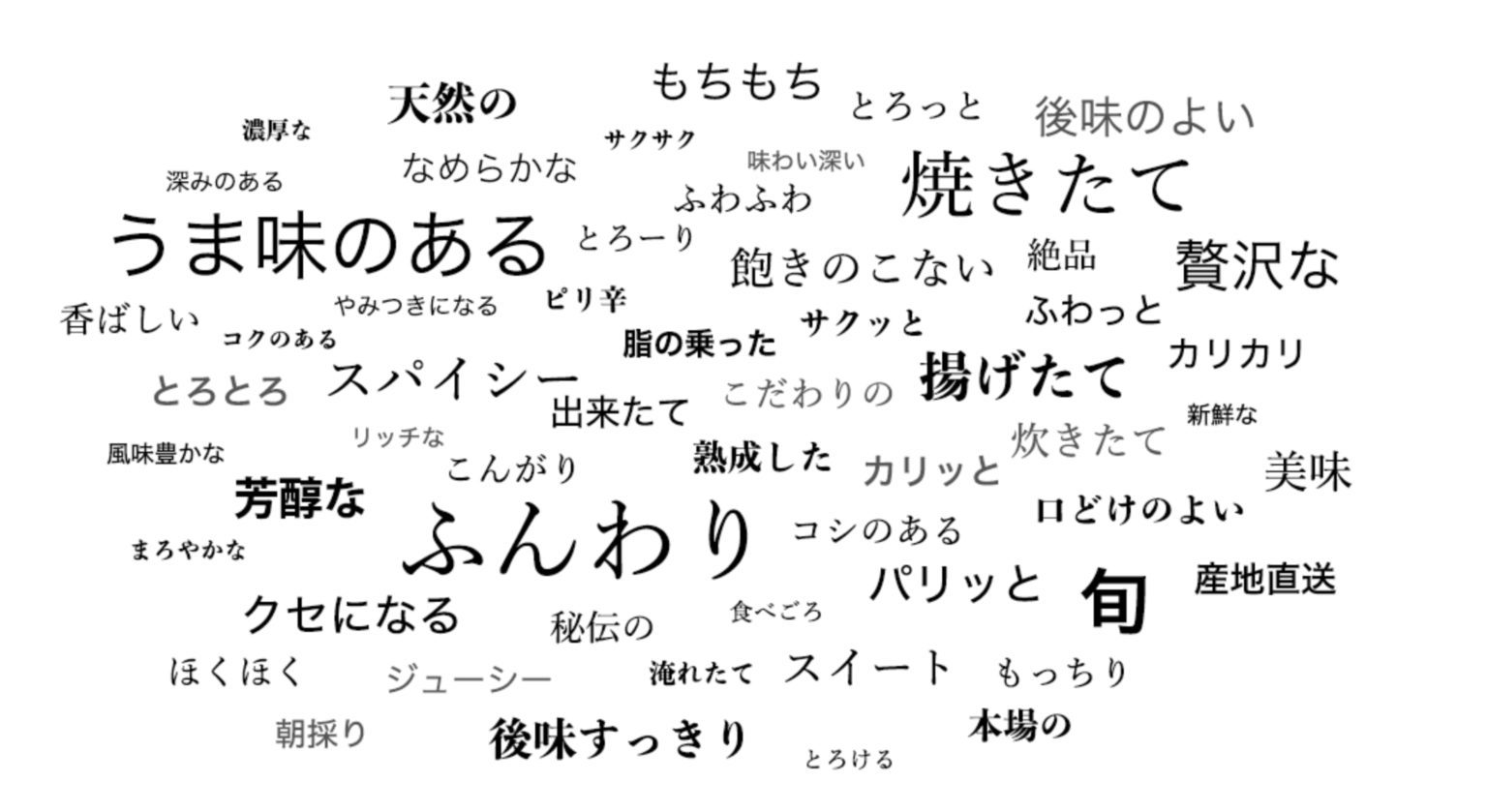

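+PaddleOCR aims to build a rich, leading, and practical OCR toolkit. In addition to Chinese and English models for general scenarios, it provides models trained specifically for English scenarios,
+as well as minority-language models covering [80 languages](#语种缩写).
+
+The English model supports detection and recognition of upper- and lowercase letters and common punctuation, and its recognition of space characters has been improved: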

+


+