Merge branch 'gru4rec' of https://github.com/123malin/PaddleRec into gru4rec

Showing

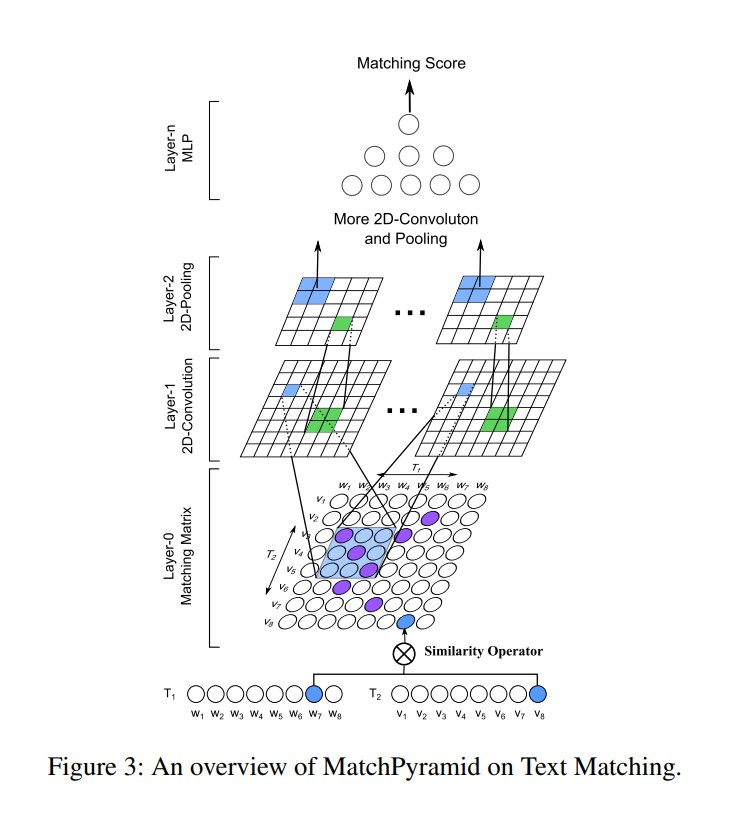

doc/imgs/match-pyramid.png

0 → 100644

219.2 KB

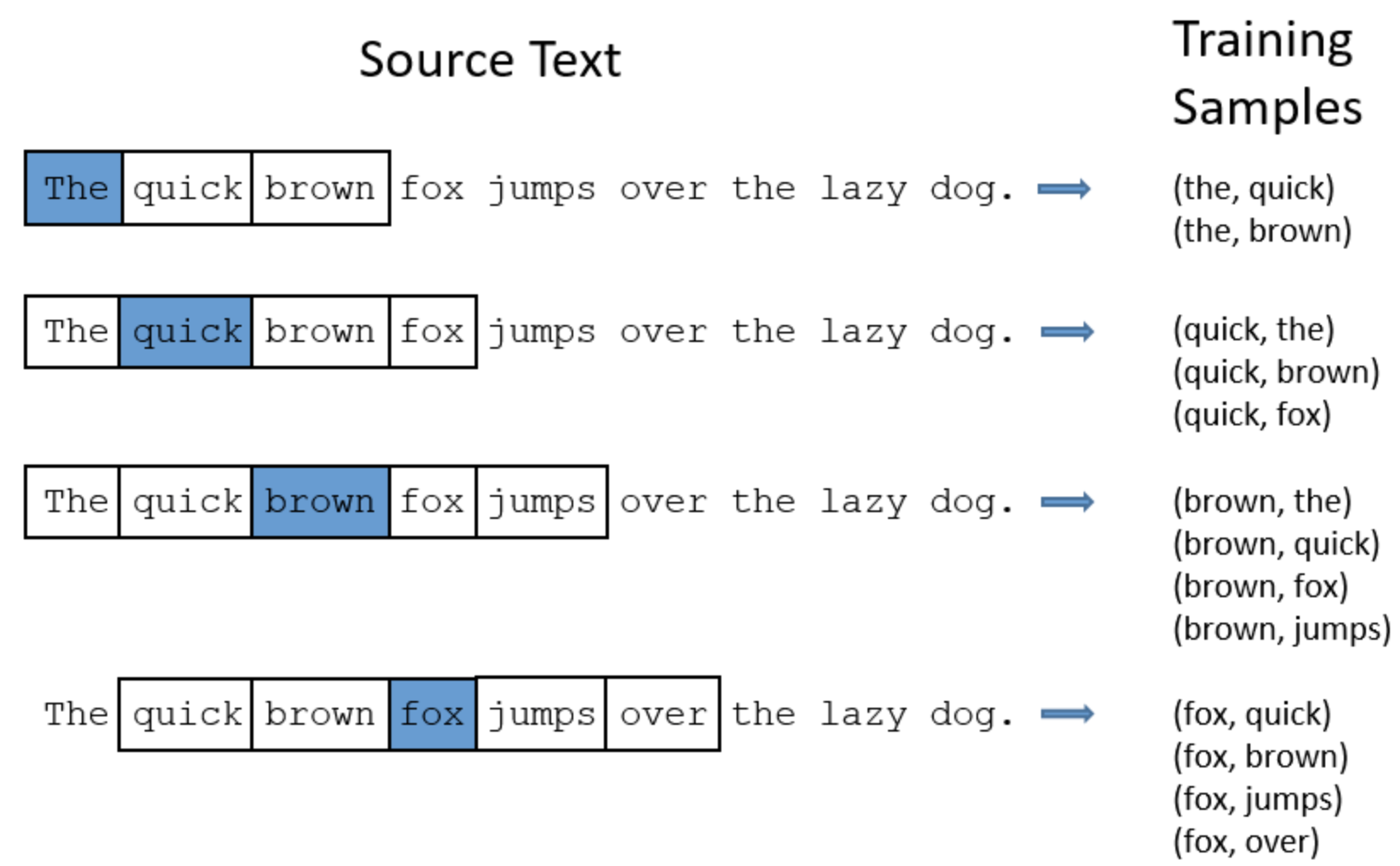

doc/imgs/w2v_train.png

0 → 100644

223.3 KB

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

models/match/dssm/readme.md

0 → 100644

models/match/dssm/run.sh

0 → 100644

models/match/dssm/transform.py

0 → 100644

models/match/multiview-simnet/evaluate_reader.py

100755 → 100644

models/match/multiview-simnet/reader.py

100755 → 100644

models/recall/word2vec/README.md

0 → 100644

models/recall/word2vec/infer.py

0 → 100644

models/recall/word2vec/utils.py

0 → 100644

此差异已折叠。

此差异已折叠。

tools/cal_pos_neg.py

0 → 100644