Merge pull request #122 from overlordmax/pr_6192341

add flen

Showing

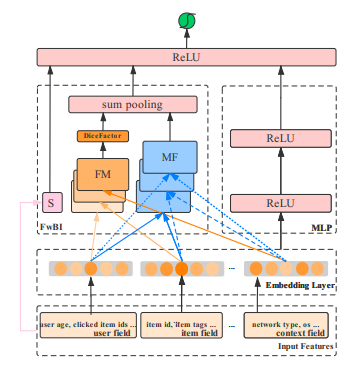

doc/imgs/flen.png

0 → 100644

36.1 KB

models/rank/flen/README.md

0 → 100644

models/rank/flen/__init__.py

0 → 100644

models/rank/flen/config.yaml

0 → 100644

models/rank/flen/data/run.sh

0 → 100644

此差异已折叠。

models/rank/flen/model.py

0 → 100644