Merge branch 'master' into doc_v4


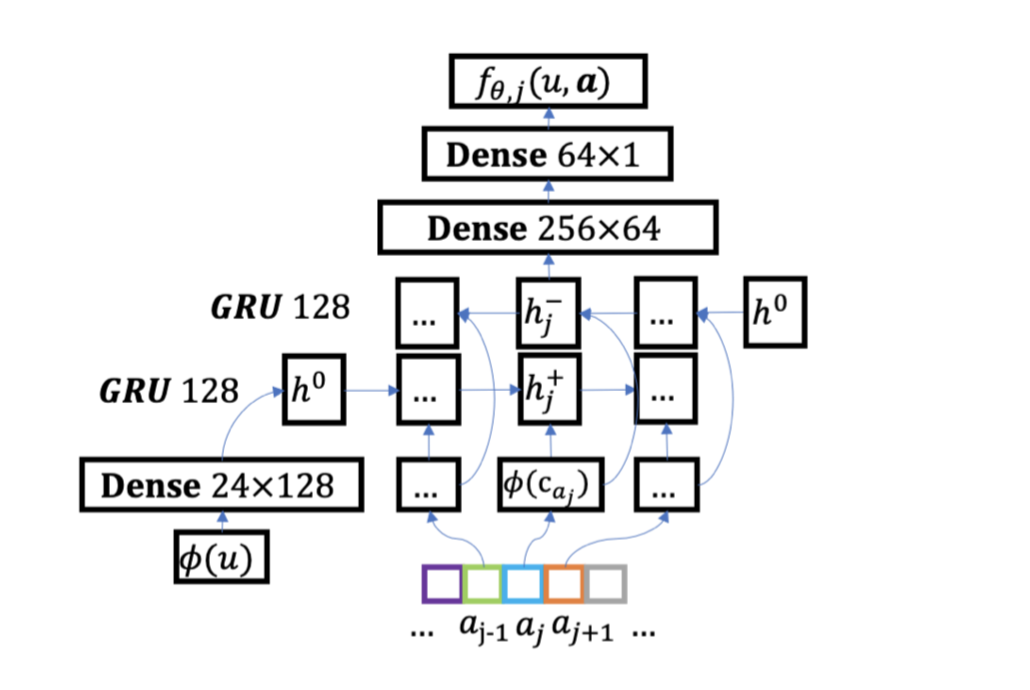

doc/imgs/listwise.png

0 → 100644

133.7 KB

This diff is collapsed.

File added

This diff is collapsed.

models/rerank/__init__.py

0 → 100755

This diff is collapsed.

models/rerank/listwise/model.py

0 → 100644

This diff is collapsed.

models/rerank/readme.md

0 → 100755

This diff is collapsed.
