Merge branch 'develop' of github.com:baidu/Paddle into feature/refine_doc_drnn

demo/quick_start/data/README.md

0 → 100644

doc/about/index.rst

0 → 100644

doc/algorithm/rnn/bi_lstm.jpg

Deleted

120000 → 0

doc/api/index.rst

0 → 100644

File moved

File moved

File moved

File moved

File moved

File moved

File moved

doc/cluster/index.rst

Deleted

100644 → 0

doc/dev/index.rst

Deleted

100644 → 0

doc/dev/layer.md

Deleted

100644 → 0

43.4 KB

File moved

File moved

doc/getstarted/index.rst

0 → 100644

File moved

File moved

doc/howto/cmd_parameter/index.md

0 → 100644

doc/howto/index.rst

0 → 100644

doc/howto/optimization/index.rst

0 → 100644

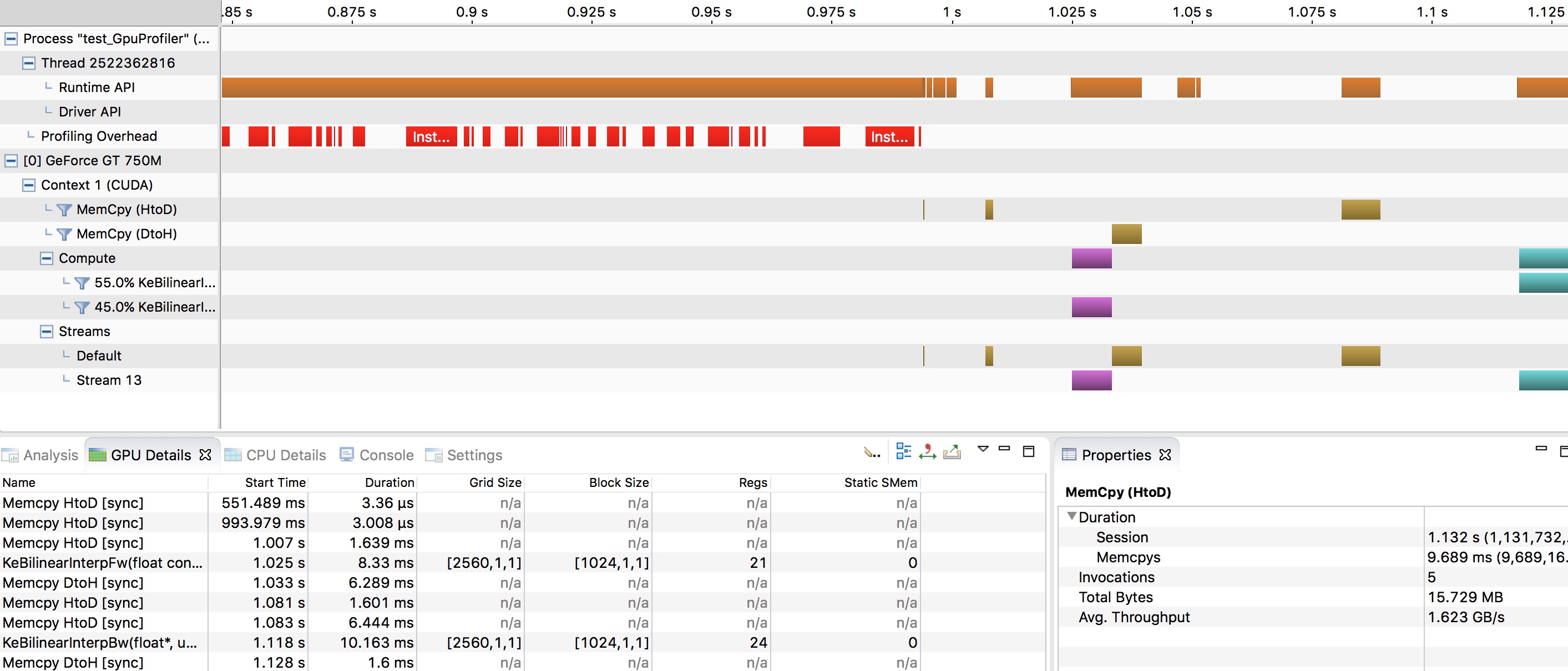

doc/howto/optimization/nvvp1.png

0 → 100644

416.1 KB

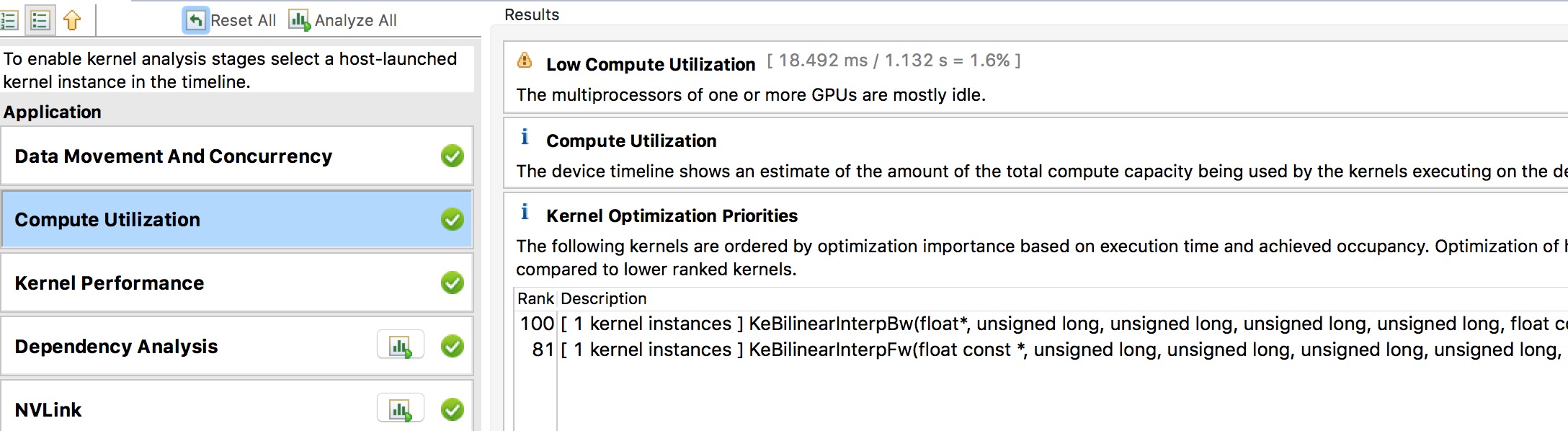

doc/howto/optimization/nvvp2.png

0 → 100644

483.5 KB

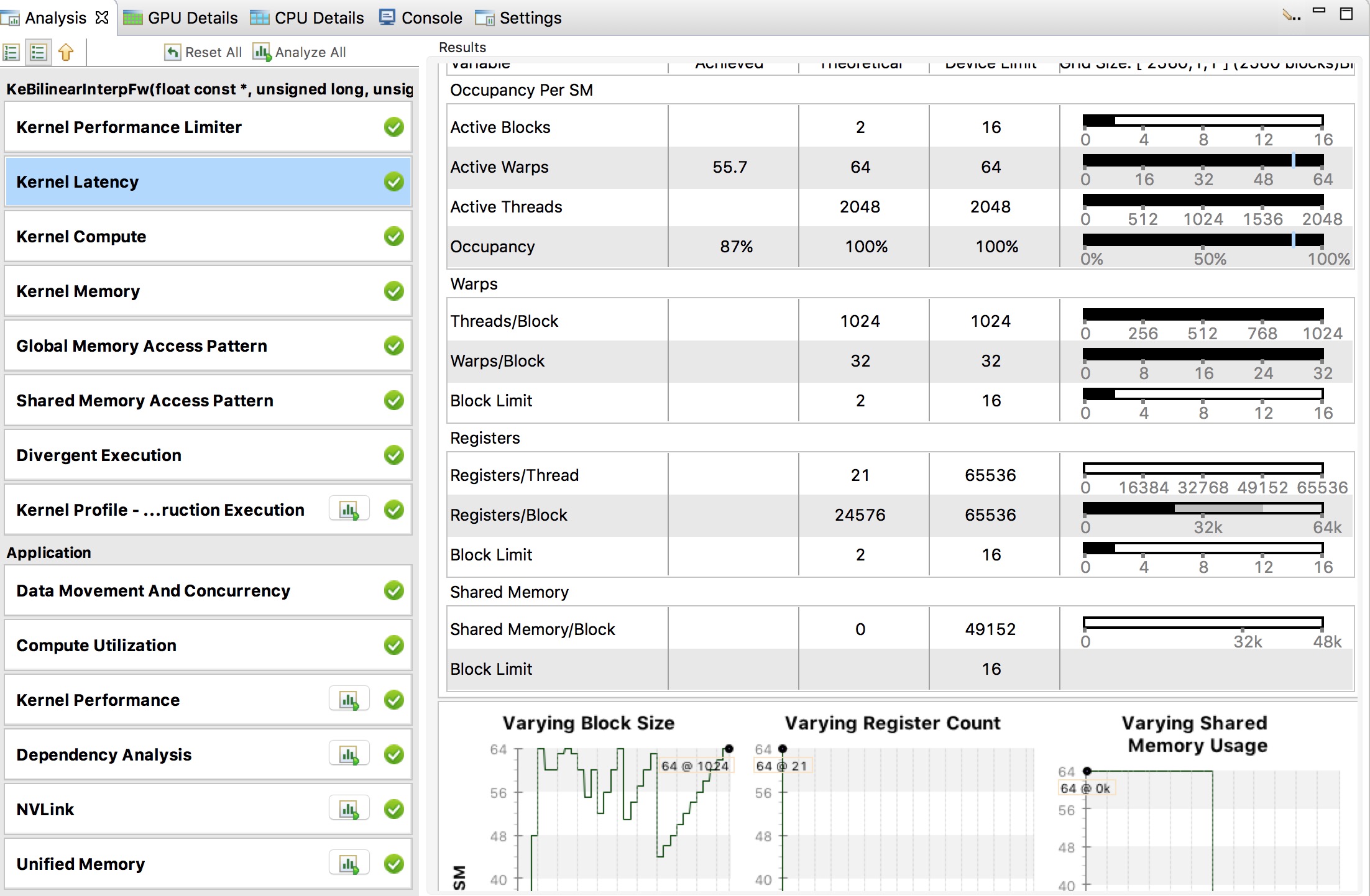

doc/howto/optimization/nvvp3.png

0 → 100644

247.8 KB

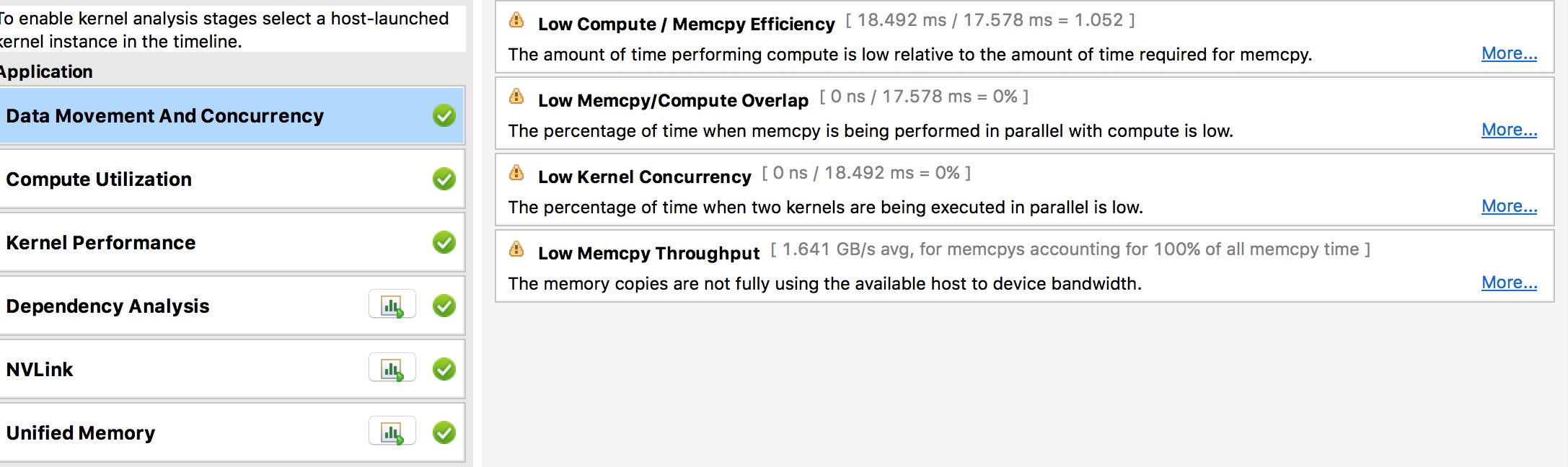

doc/howto/optimization/nvvp4.png

0 → 100644

276.6 KB

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

doc/ui/index.md

Deleted

100644 → 0

doc/user_guide.rst

Deleted

100644 → 0

doc_cn/cluster/k8s/Dockerfile

0 → 100644

doc_cn/cluster/k8s/job.yaml

0 → 100644

doc_cn/cluster/k8s/start.sh

0 → 100755

doc_cn/ui/cmd/dump_config.rst

Deleted

100644 → 0

doc_cn/ui/cmd/merge_model.rst

Deleted

100644 → 0
