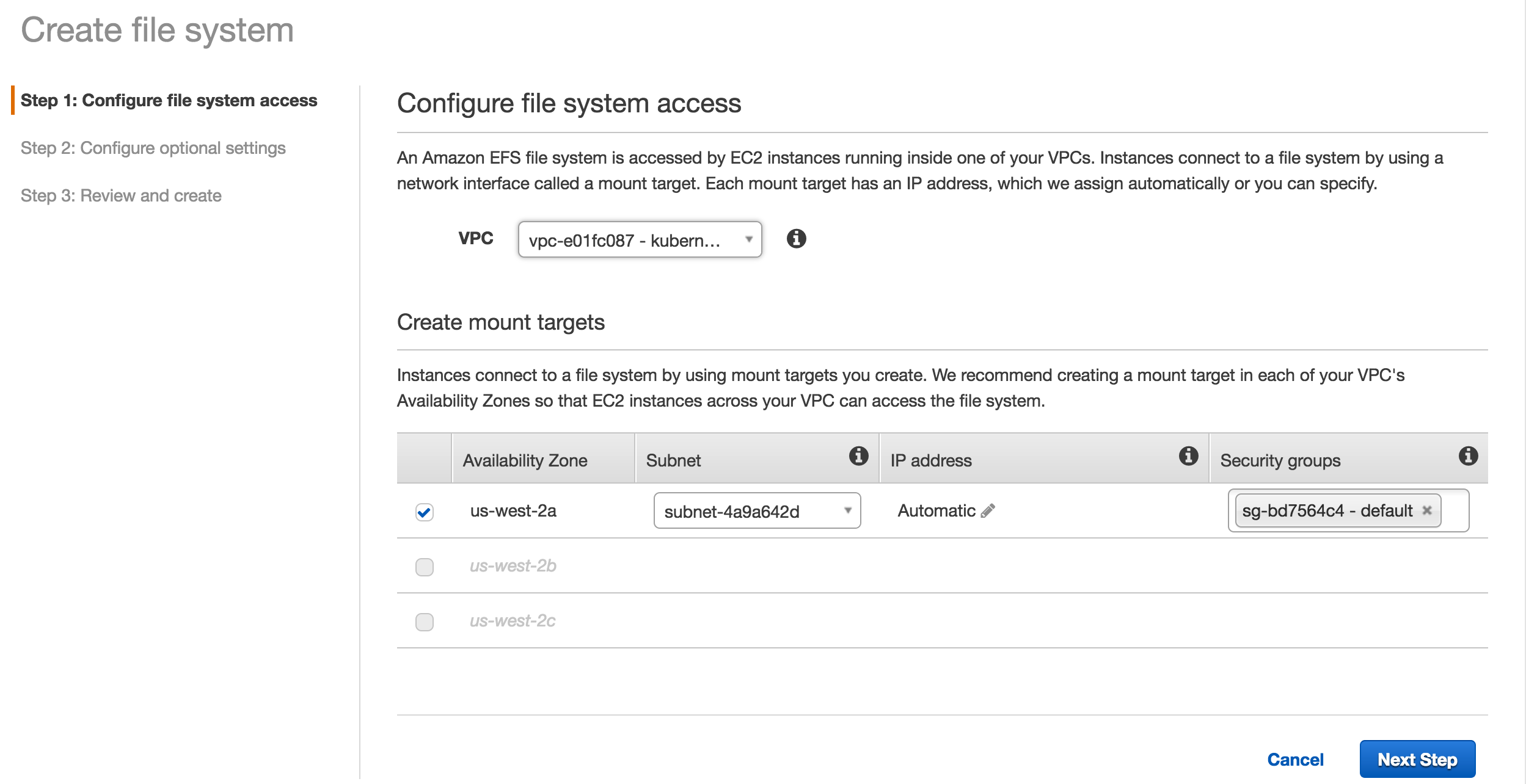

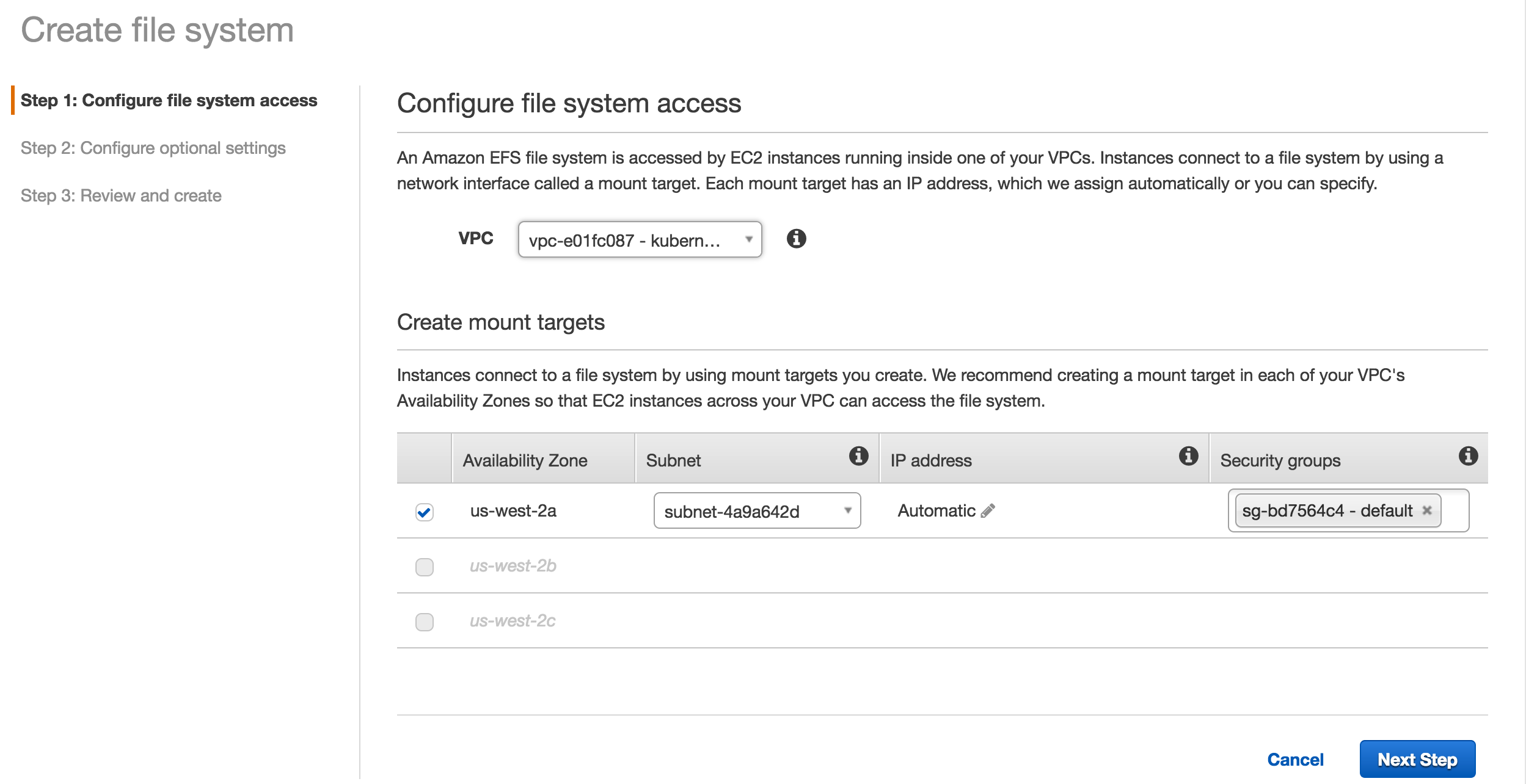

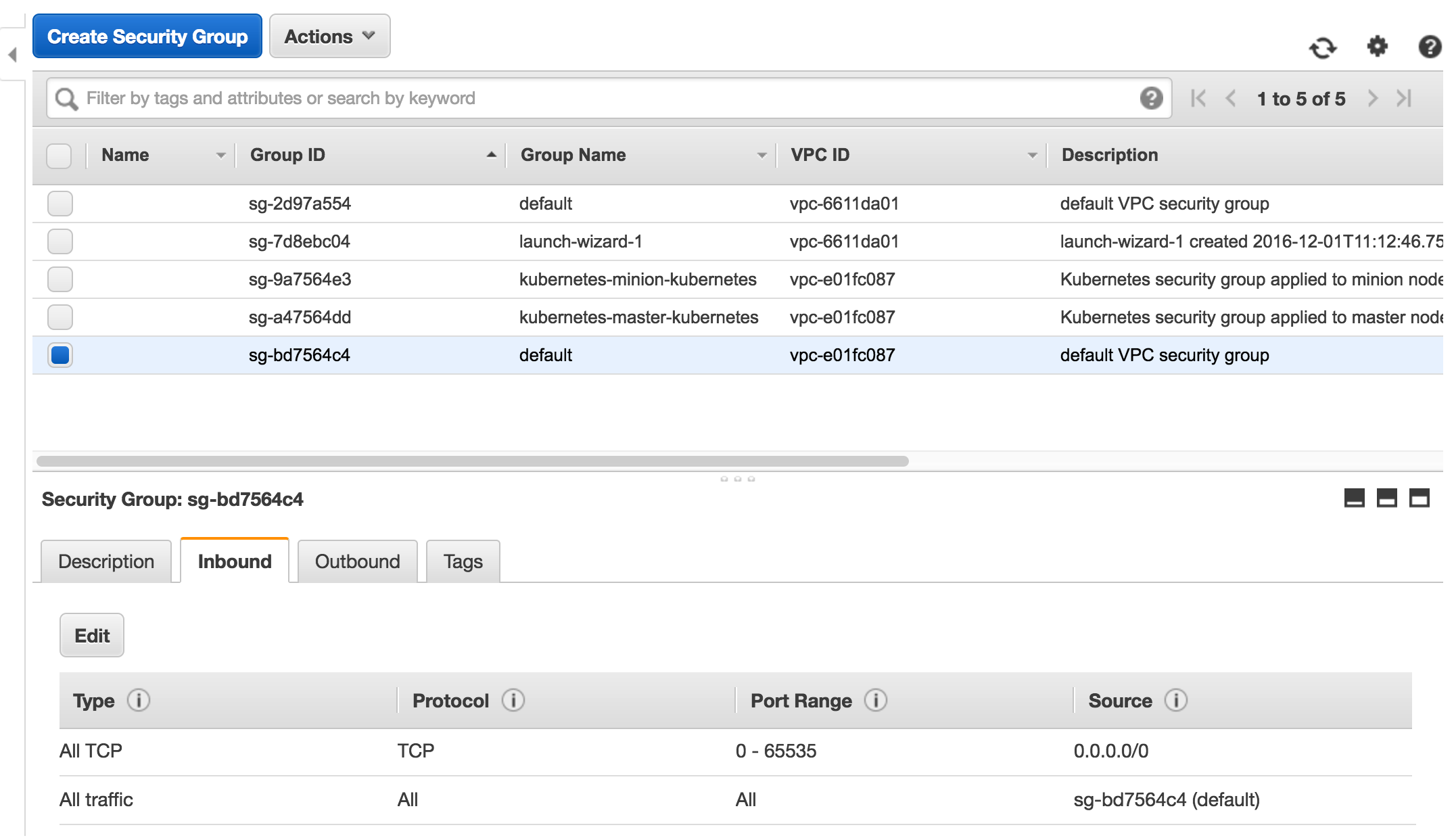

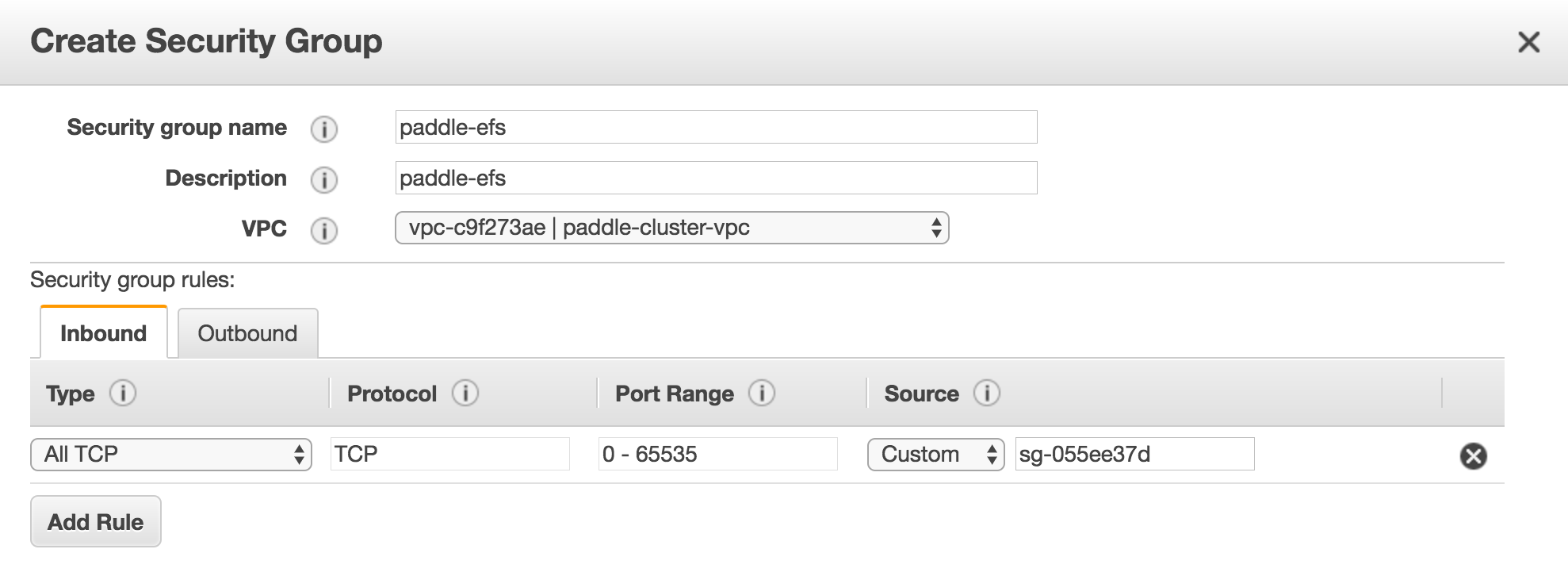

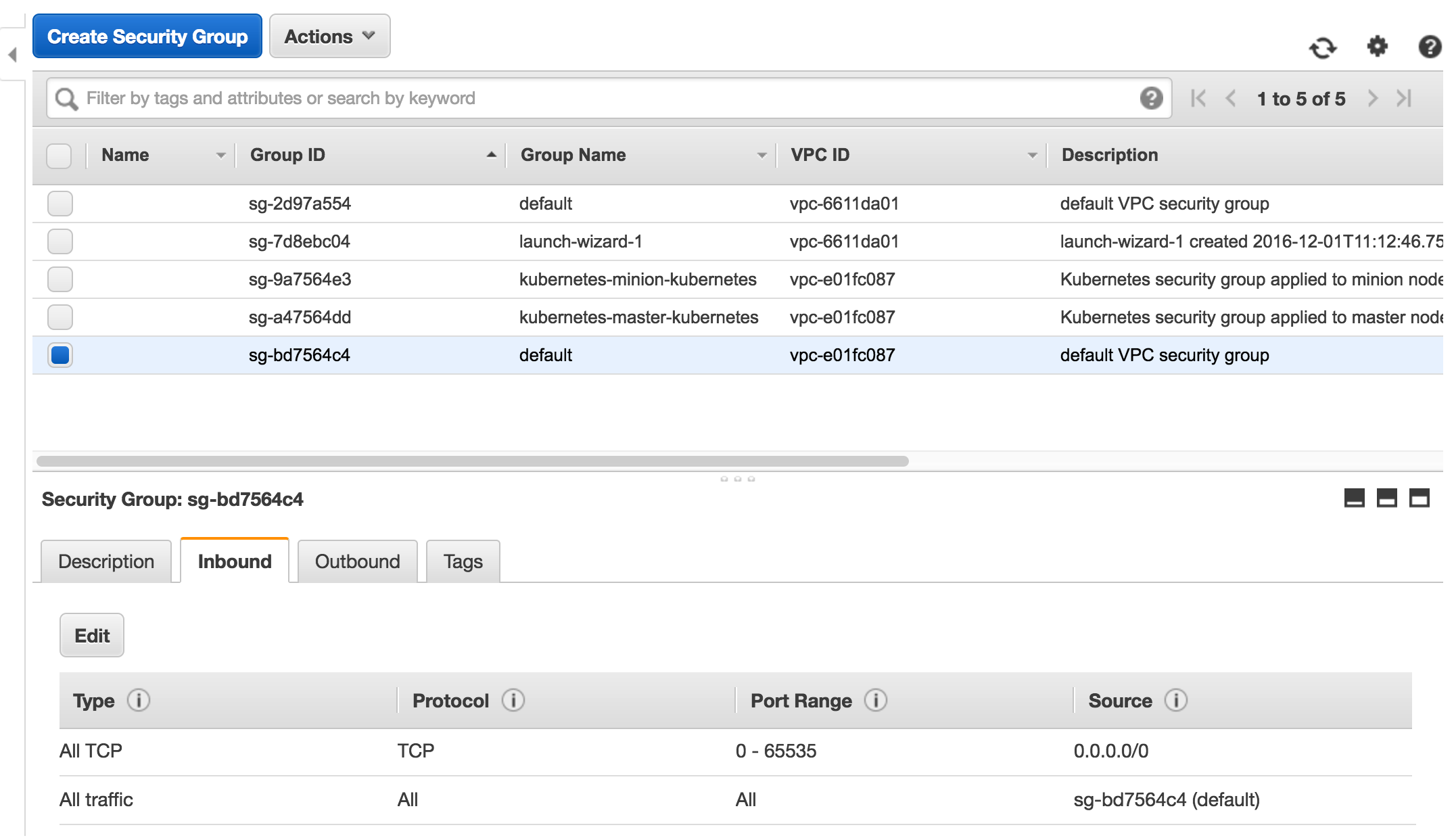

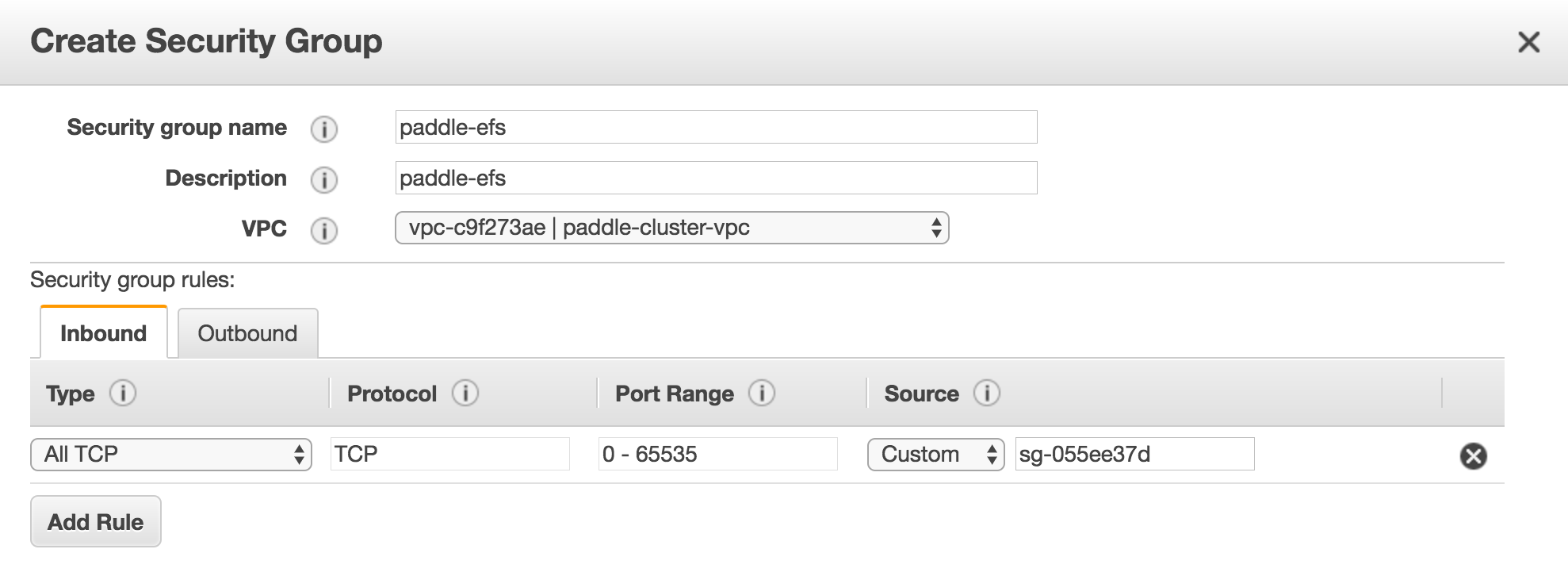

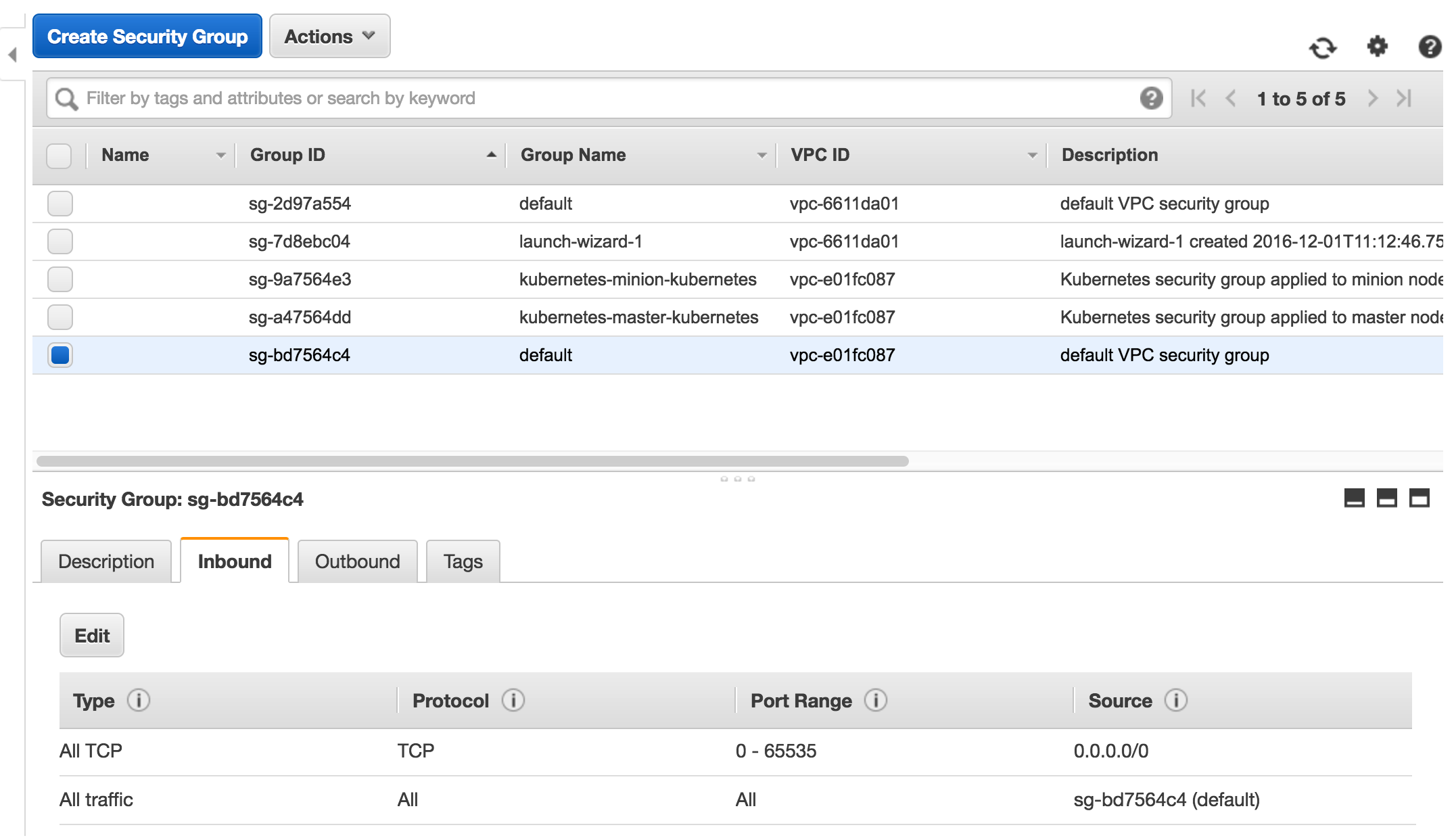

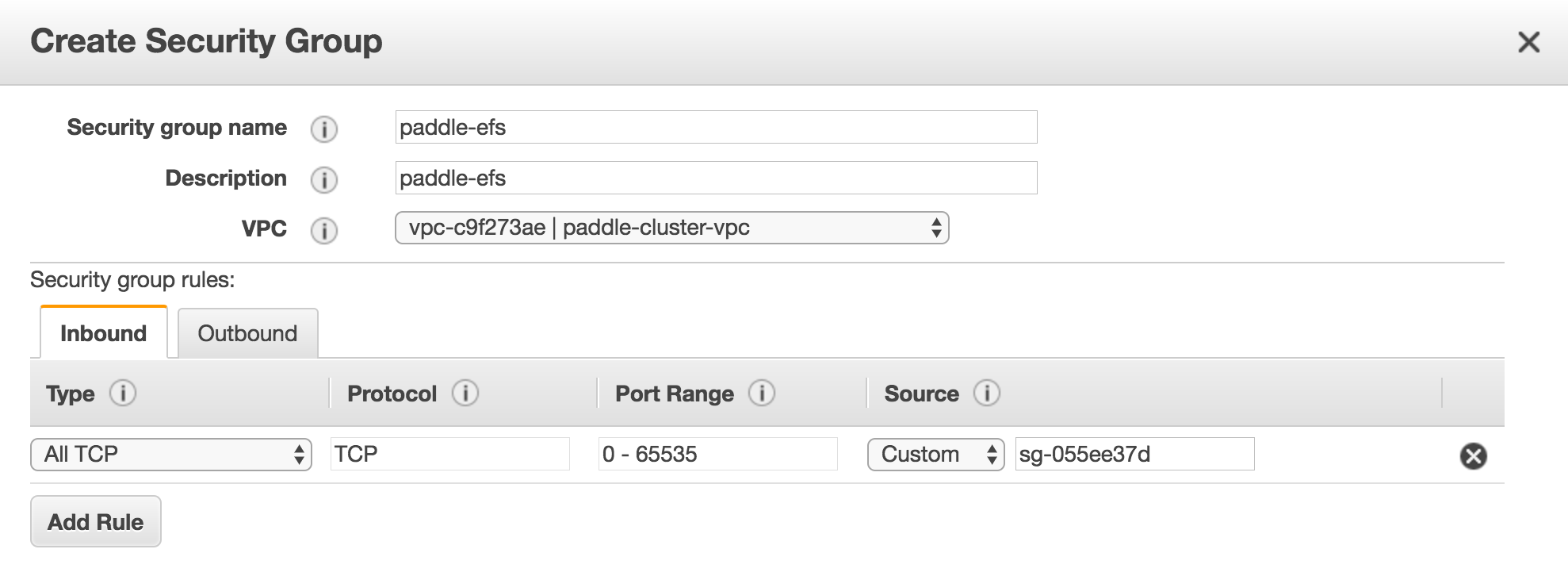

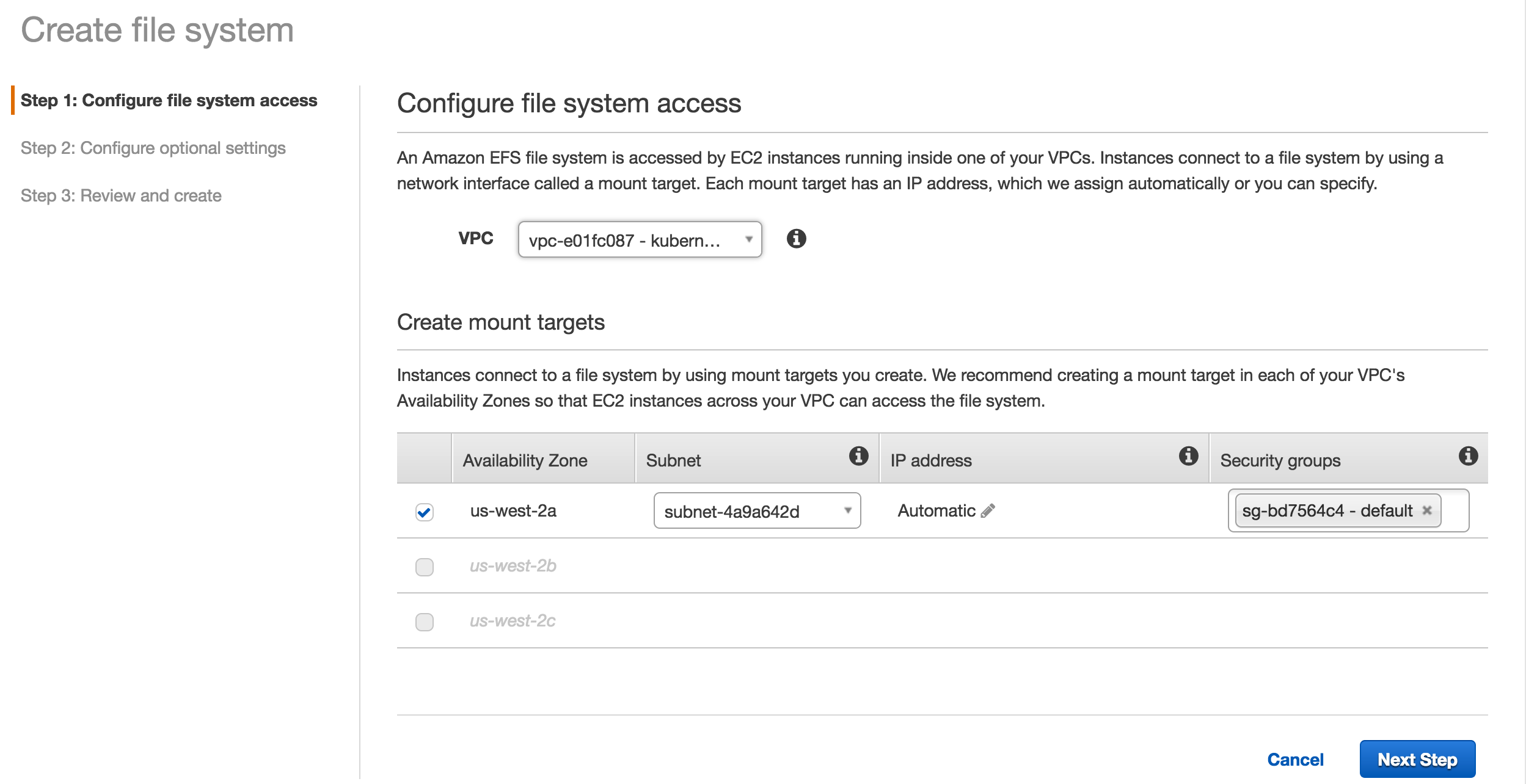

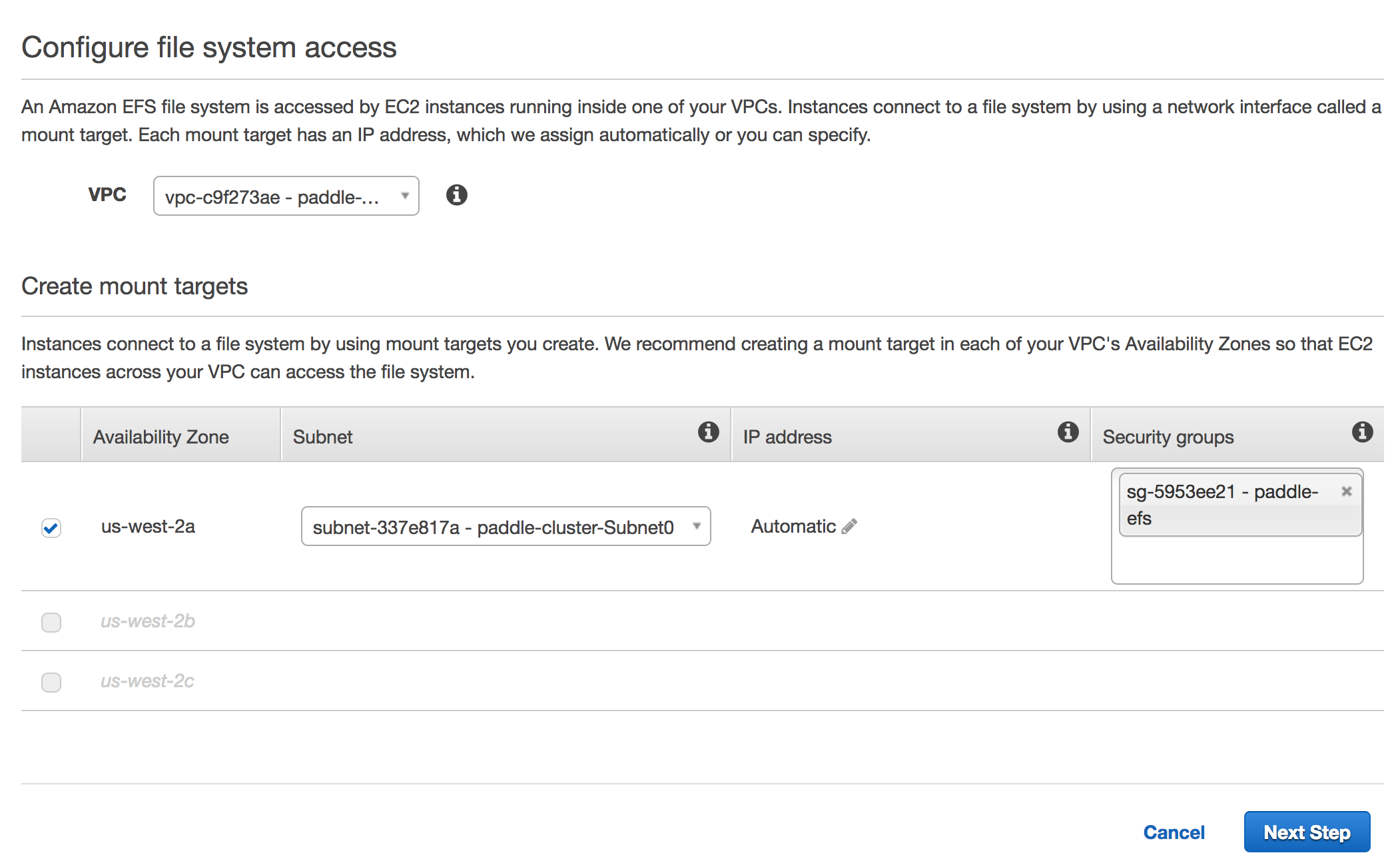

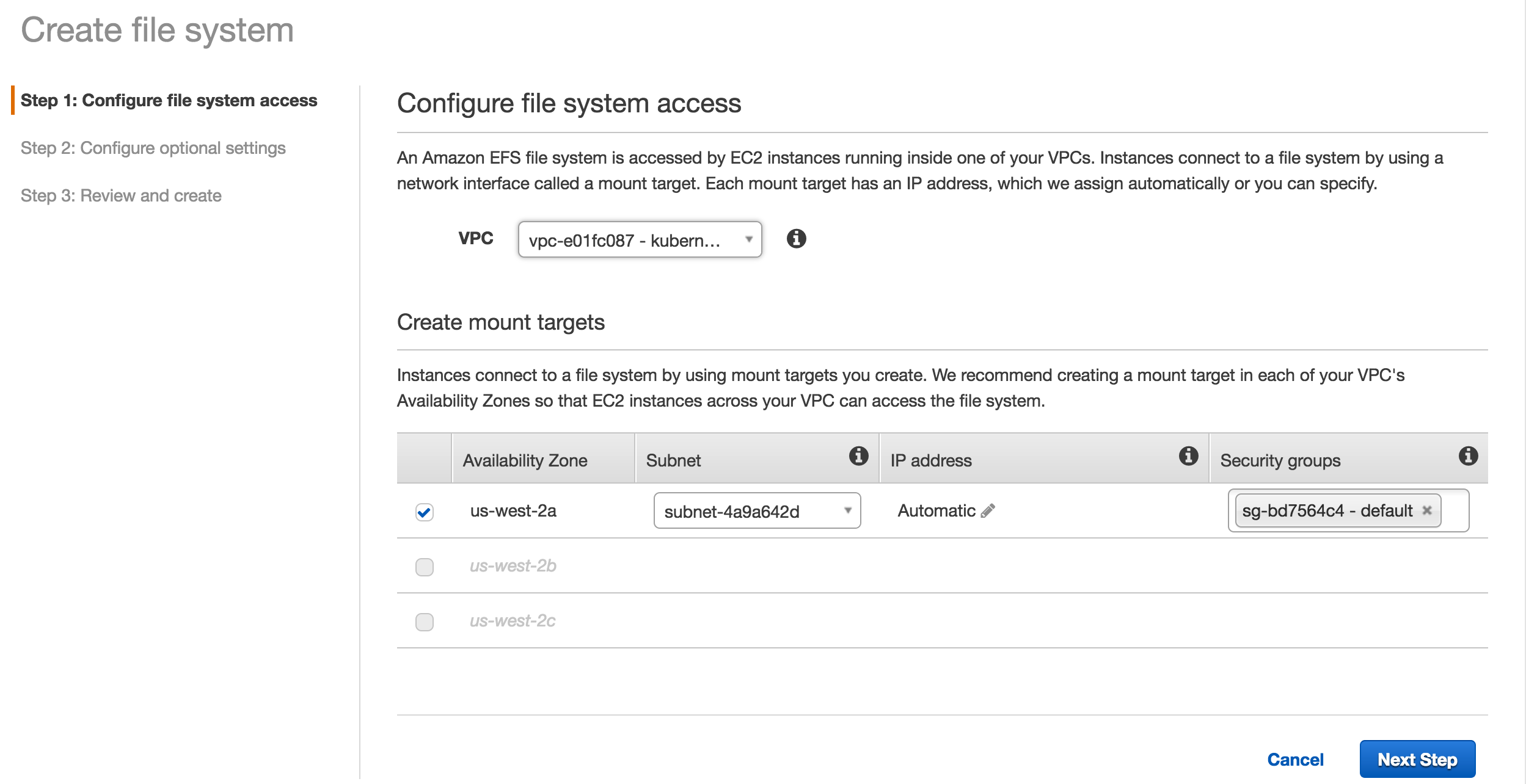

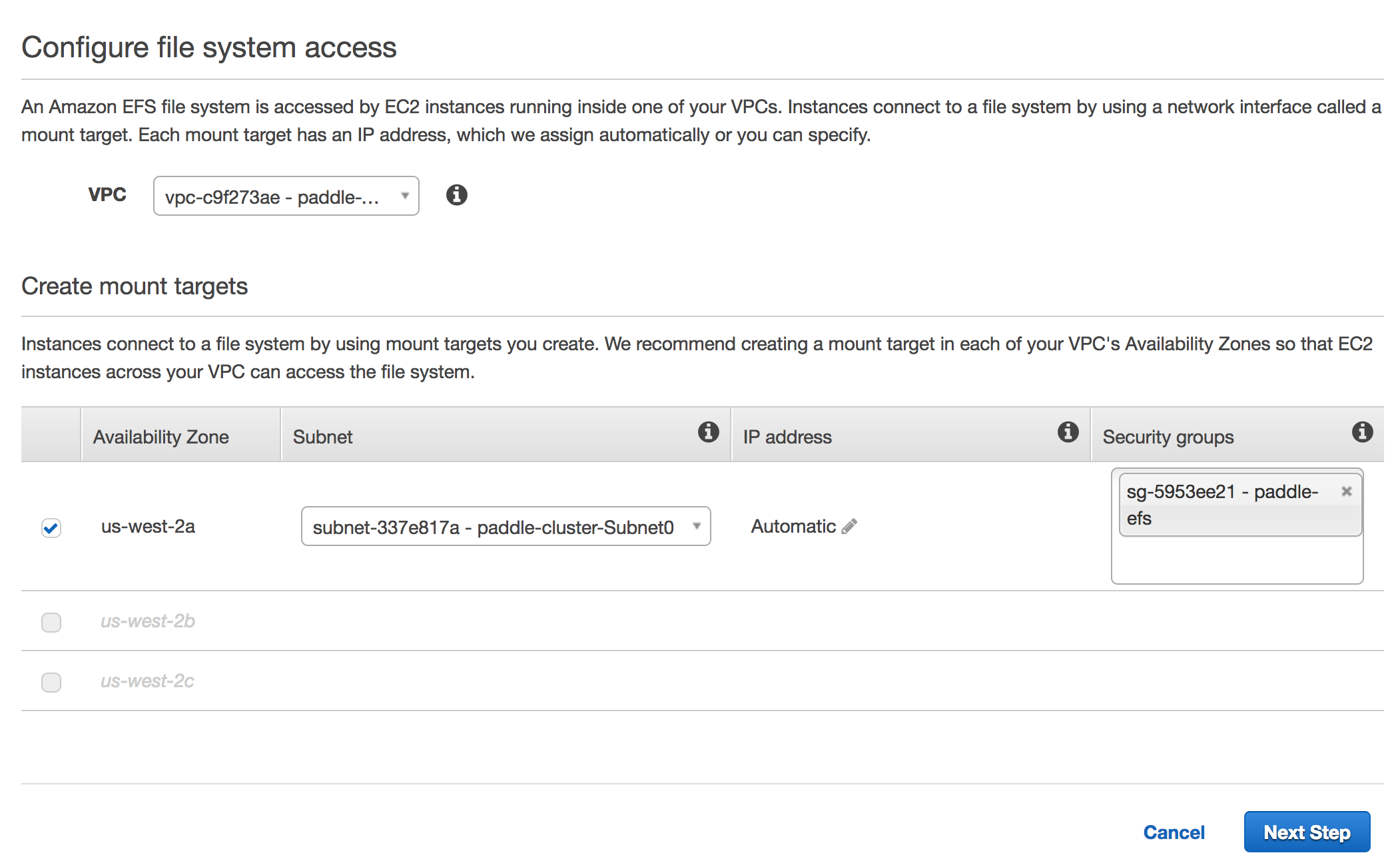

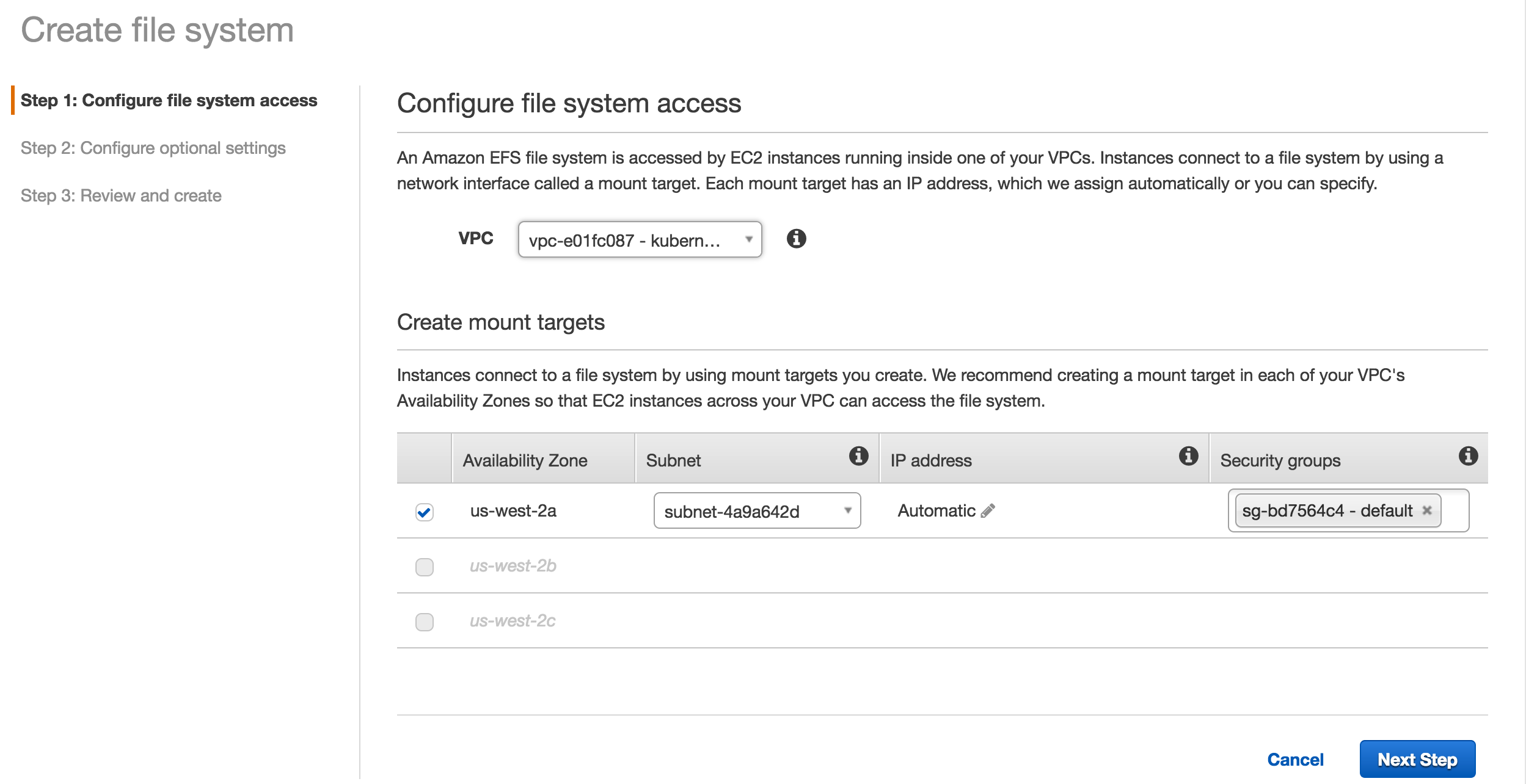

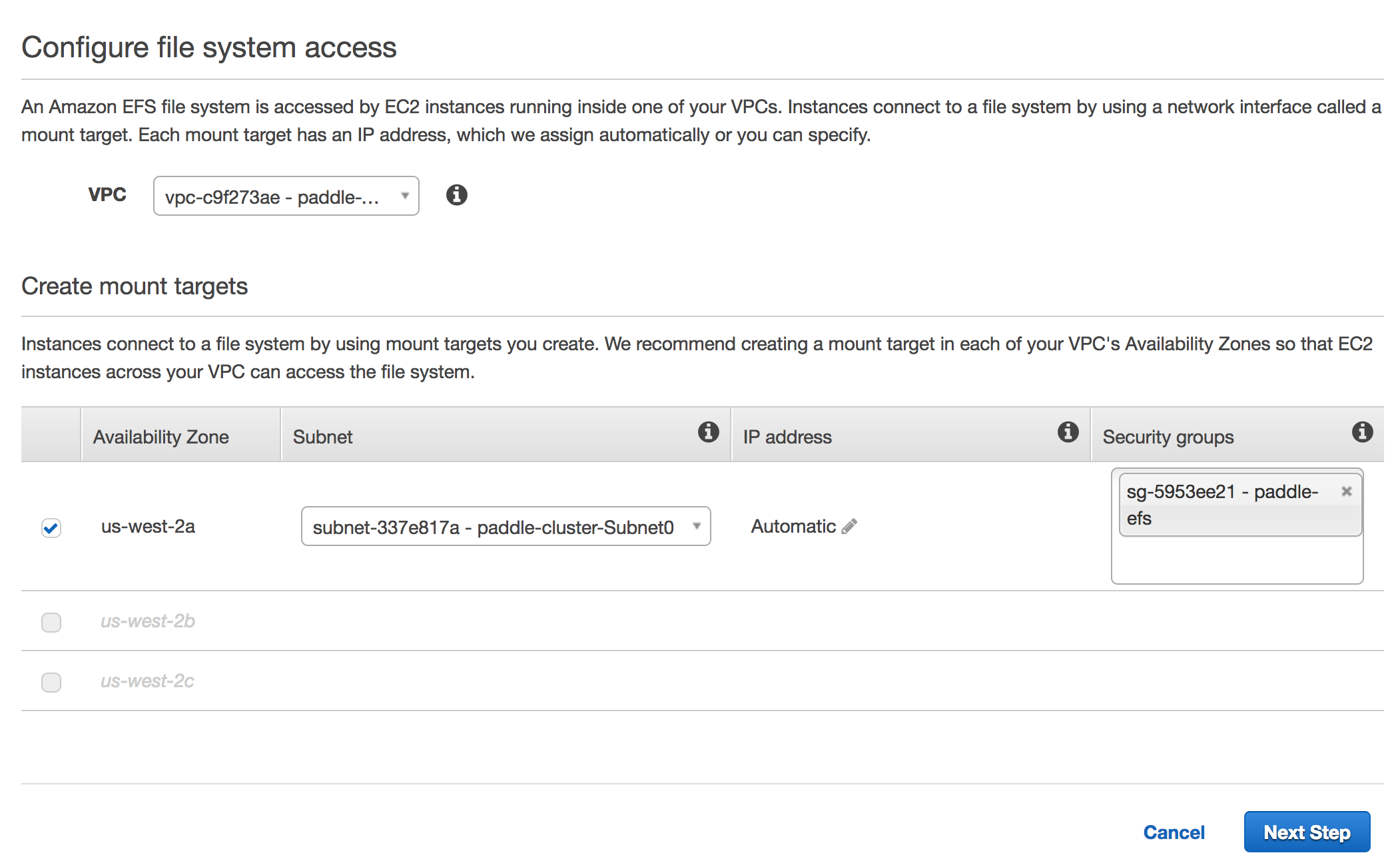

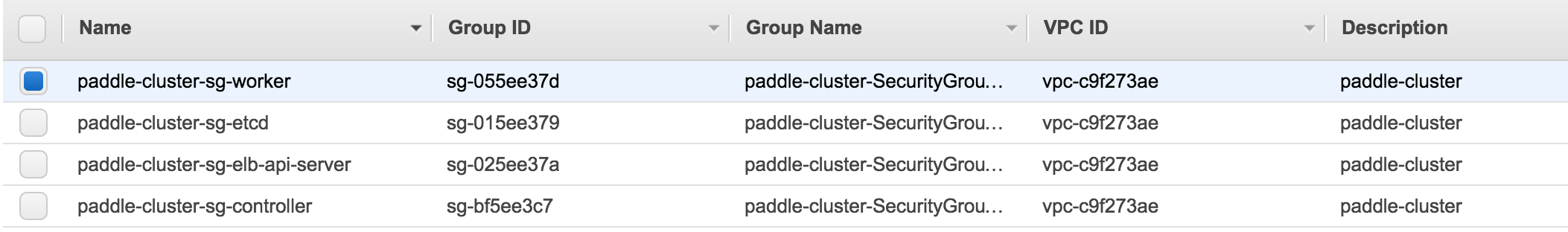

Merge pull request #1227 from helinwang/k8s_aws

paddle on aws with kubernetes tutorial now works

87.1 KB

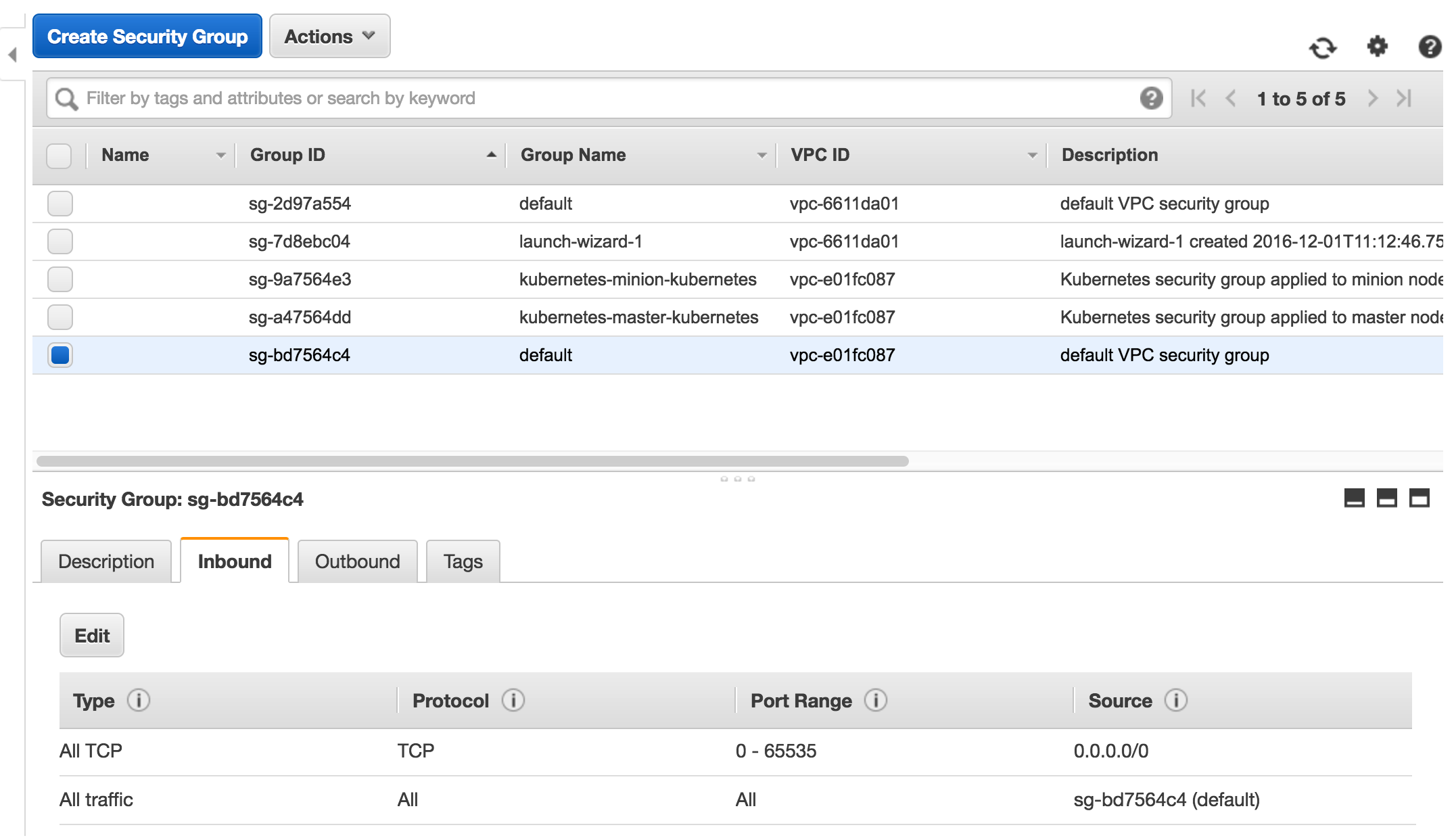

231.9 KB

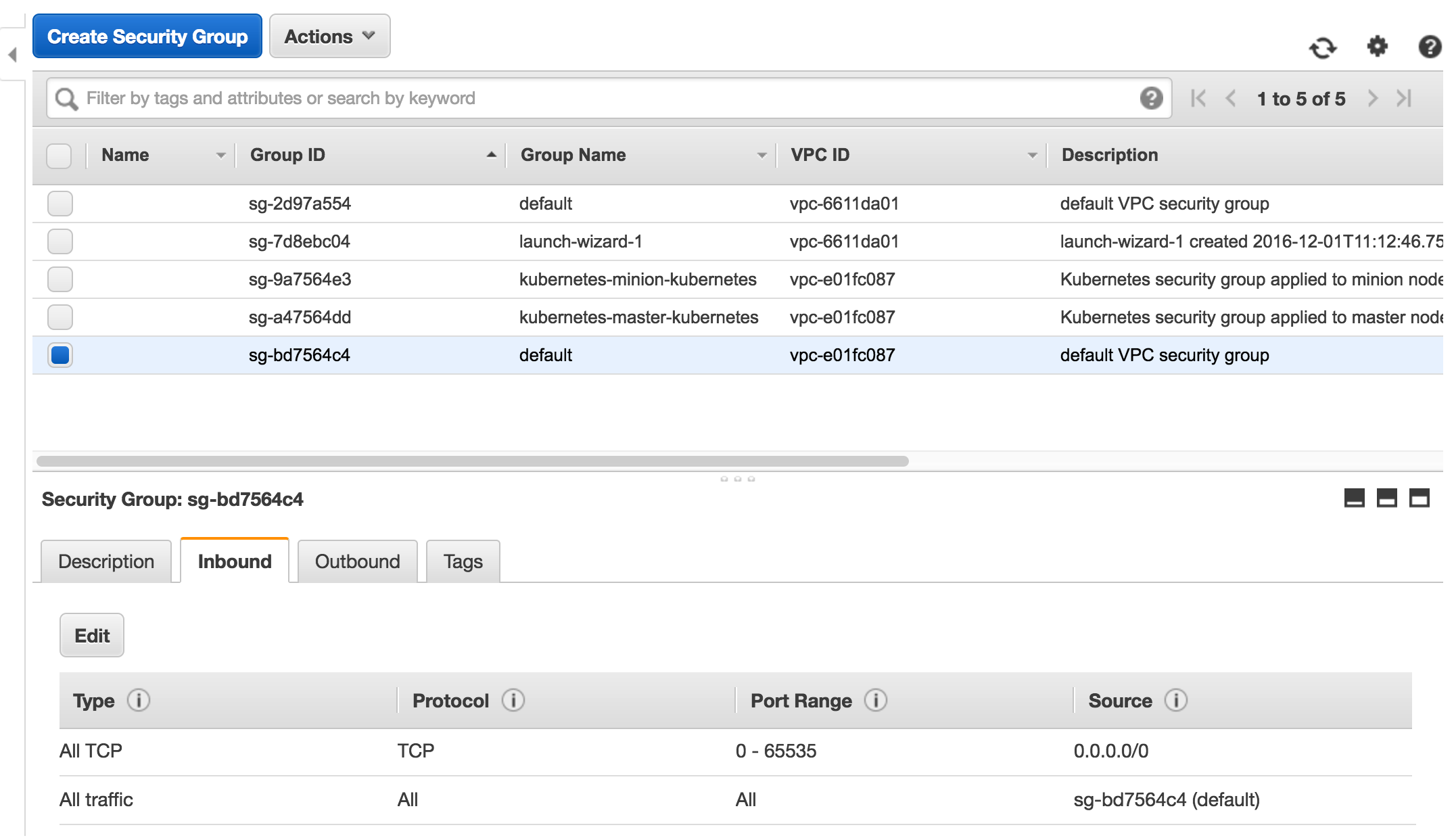

116.2 KB

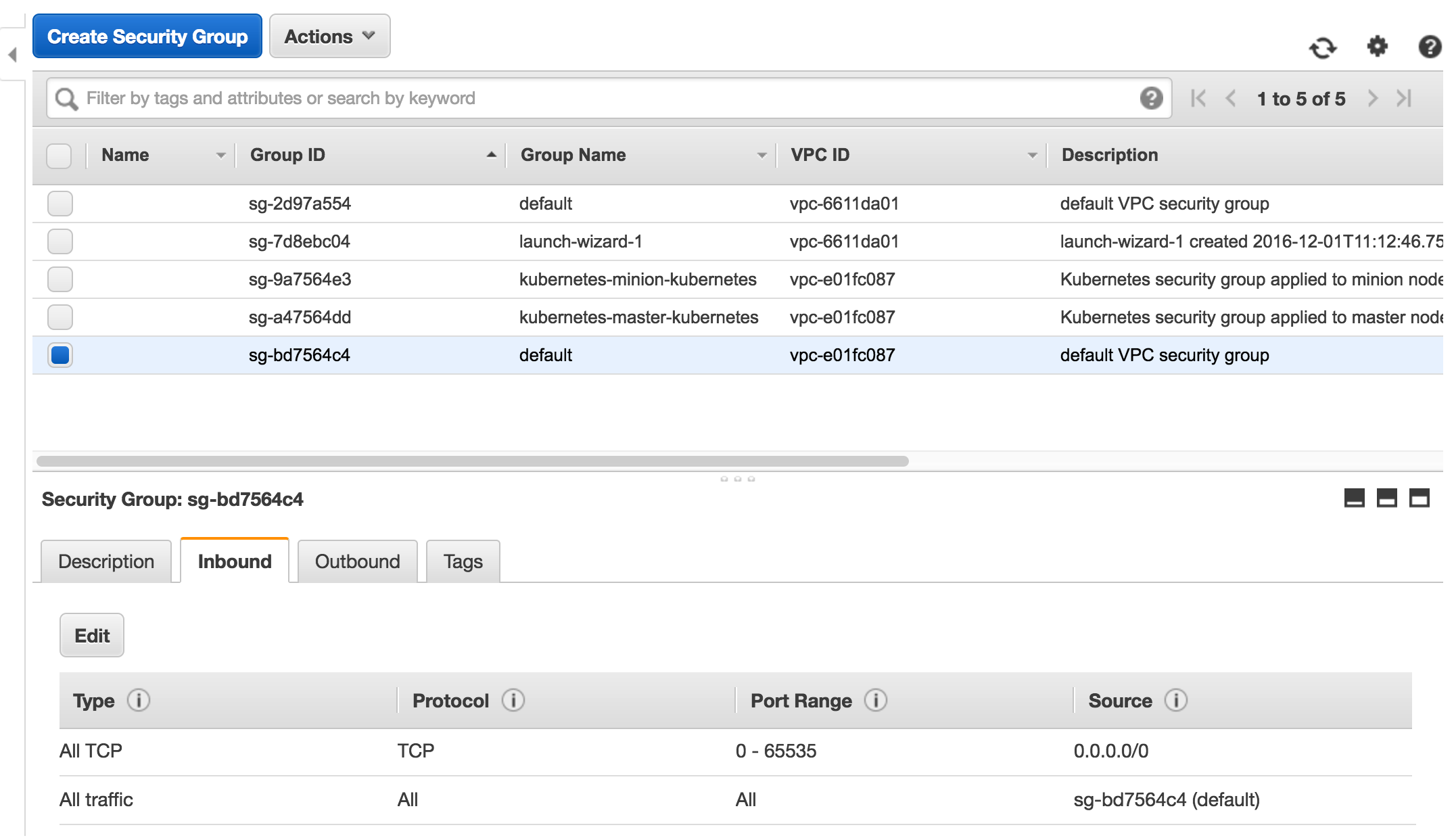

244.5 KB

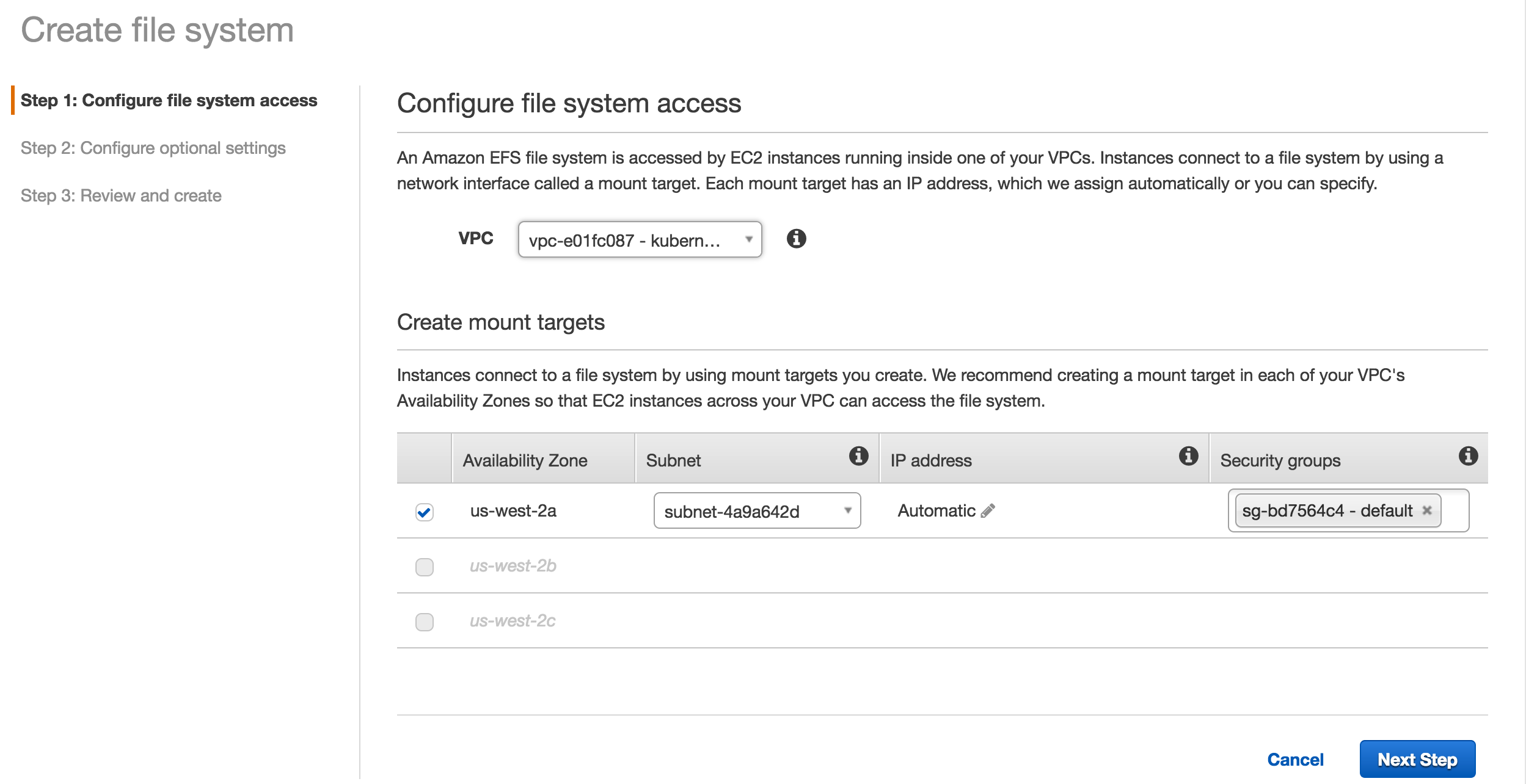

236.1 KB

87.1 KB