Merge branch 'develop' of github.com:PaddlePaddle/Paddle into auto_grwon_sparse_table

Showing

doc/mobile/CMakeLists.txt

0 → 100644

doc/mobile/index_cn.rst

0 → 100644

doc/mobile/index_en.rst

0 → 100644

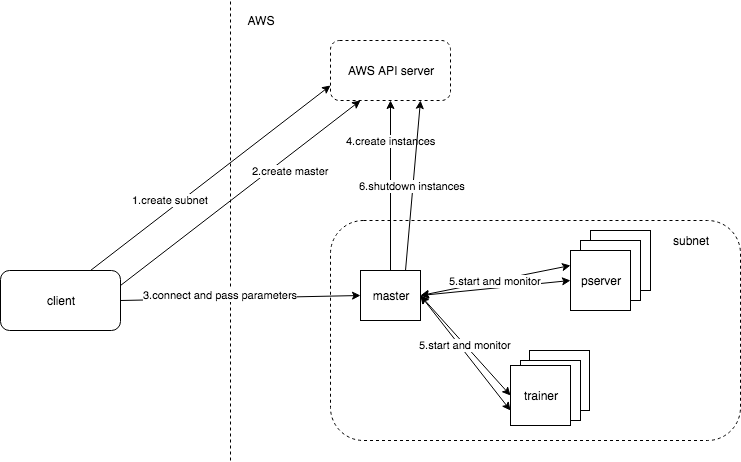

tools/aws_benchmarking/README.md

0 → 100644

39.8 KB

此差异已折叠。