remove conflict

doc/design/kernel_hint_design.md

0 → 100644

doc/design/mkl/mkl_packed.md

0 → 100644

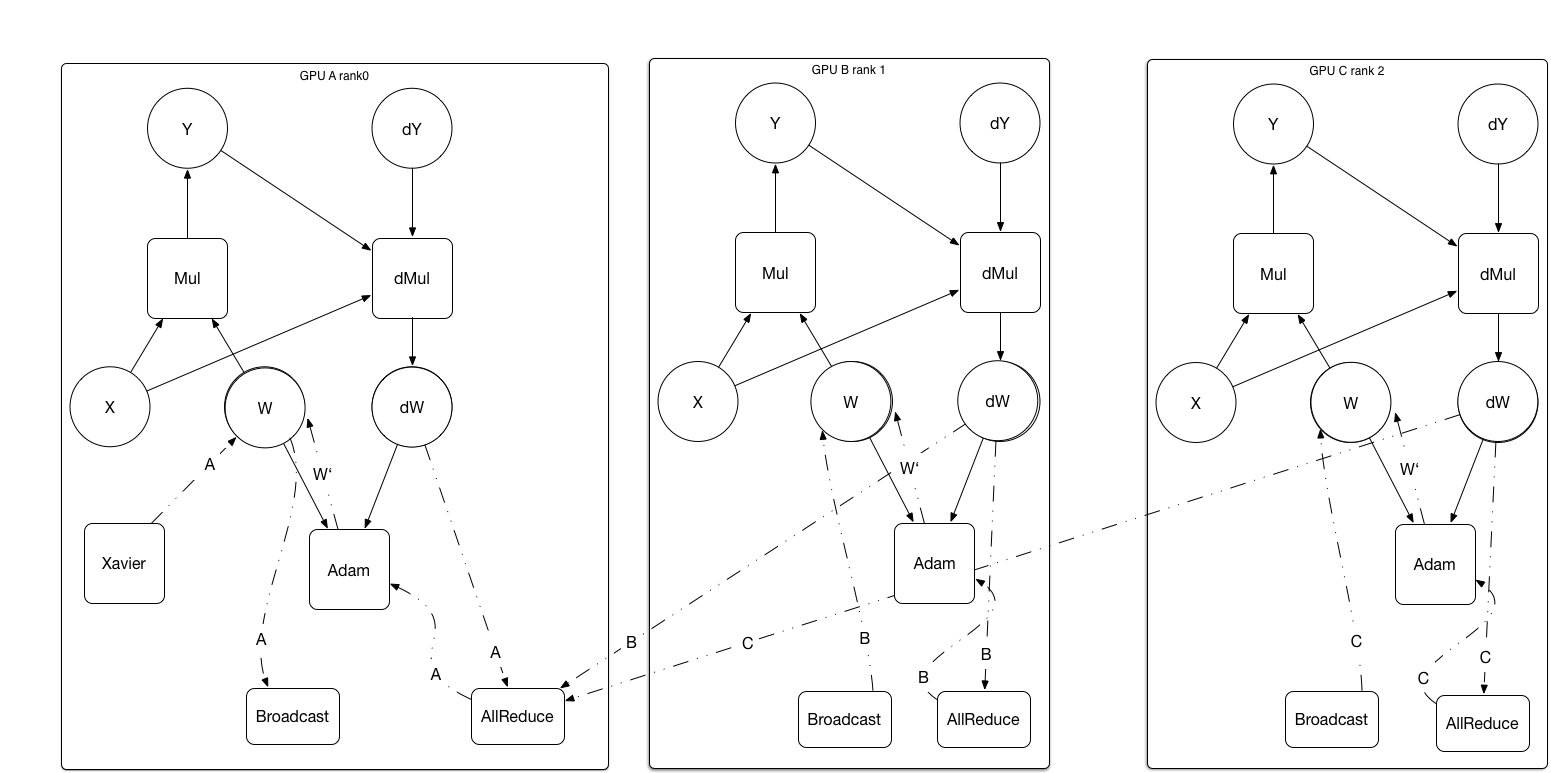

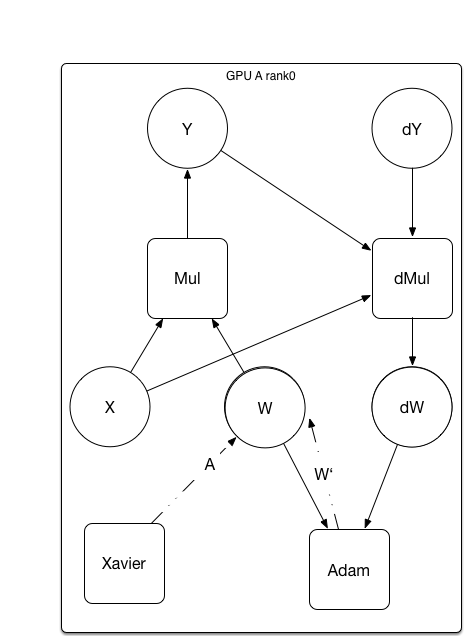

doc/design/paddle_nccl.md

0 → 100644

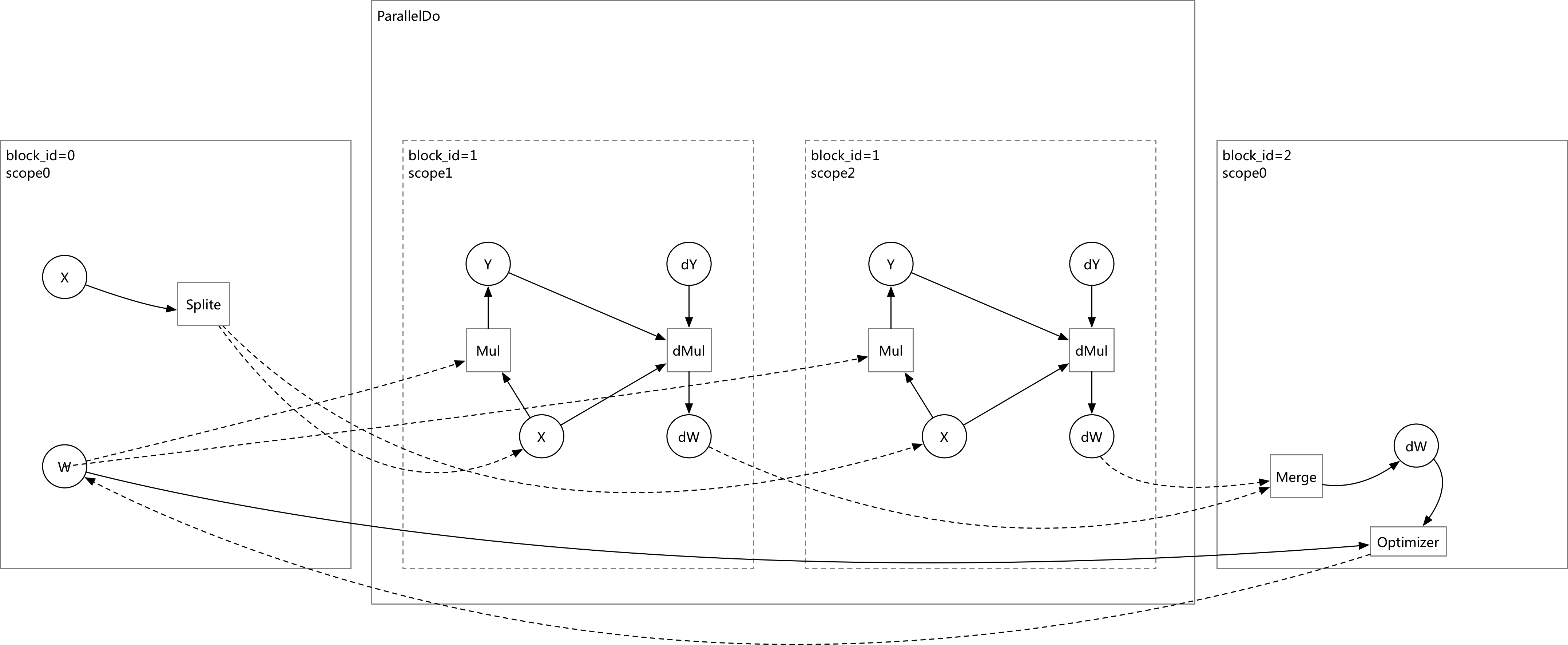

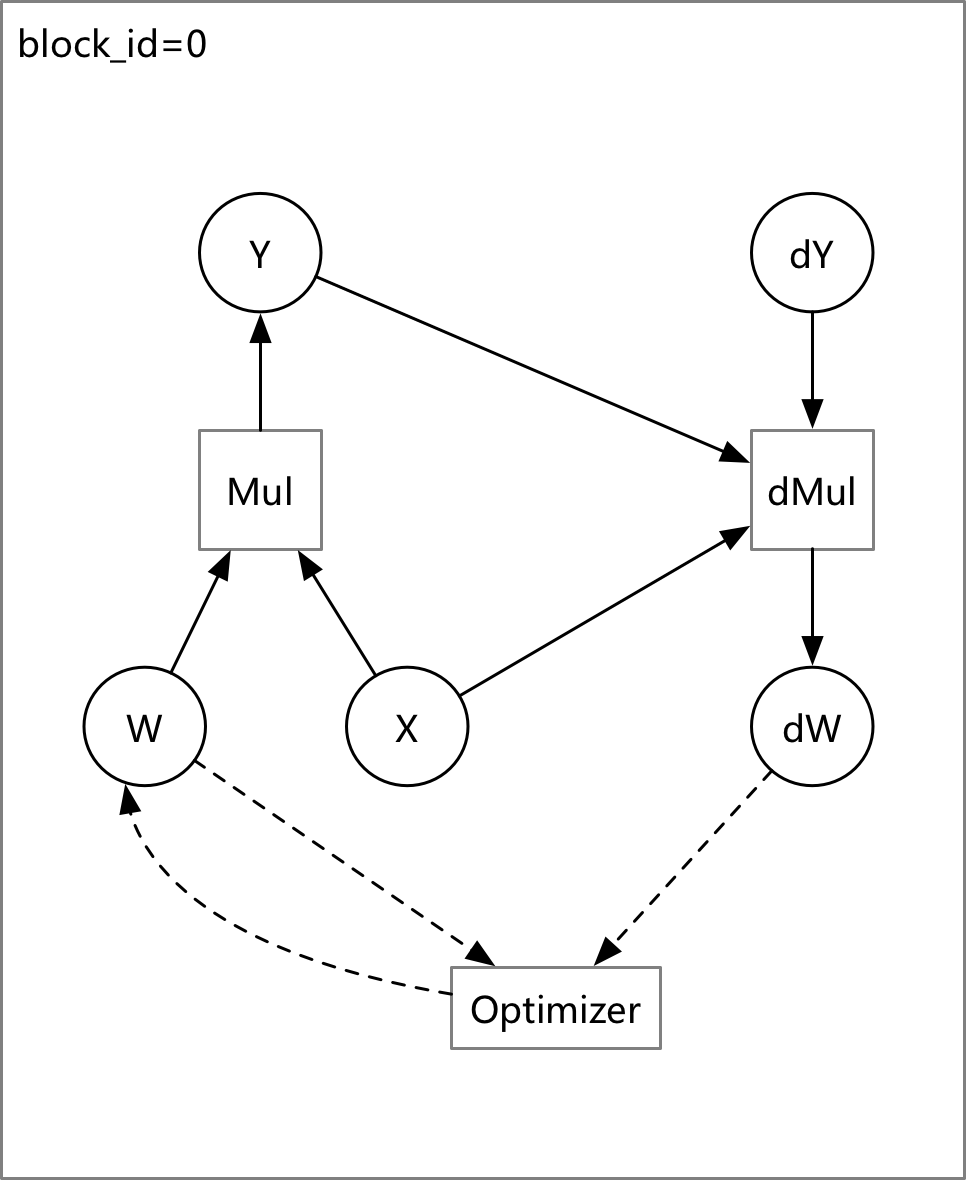

doc/design/refactor/multi_cpu.md

0 → 100644

doc/design/switch_kernel.md

0 → 100644

paddle/framework/init.cc

0 → 100644

paddle/framework/init.h

0 → 100644

paddle/framework/init_test.cc

0 → 100644

paddle/operators/spp_op.cc

0 → 100644

paddle/operators/spp_op.cu.cc

0 → 100644

paddle/operators/spp_op.h

0 → 100644

paddle/pybind/const_value.cc

0 → 100644

paddle/pybind/const_value.h

0 → 100644

python/paddle/v2/fluid/clip.py

0 → 100644