Merge branch 'develop' of https://github.com/PaddlePaddle/Paddle into average_model

Showing

文件已删除

21.4 KB

文件已删除

24.2 KB

160.0 KB

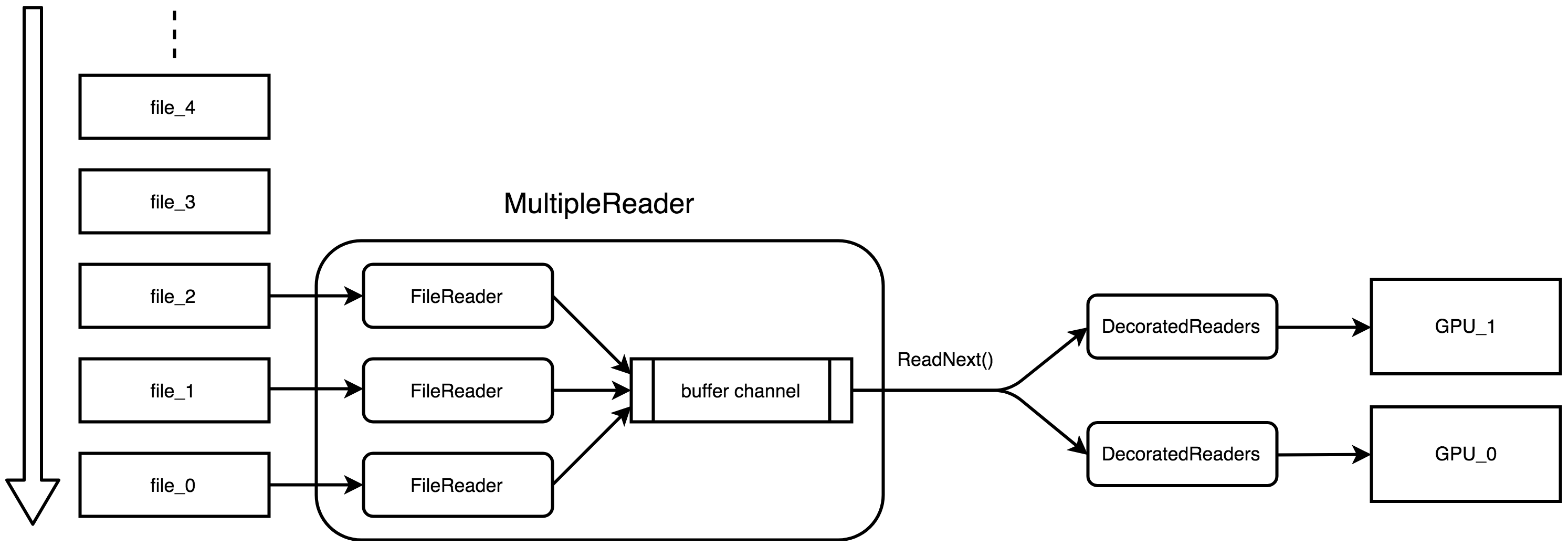

doc/design/images/readers.png

0 → 100644

347.4 KB

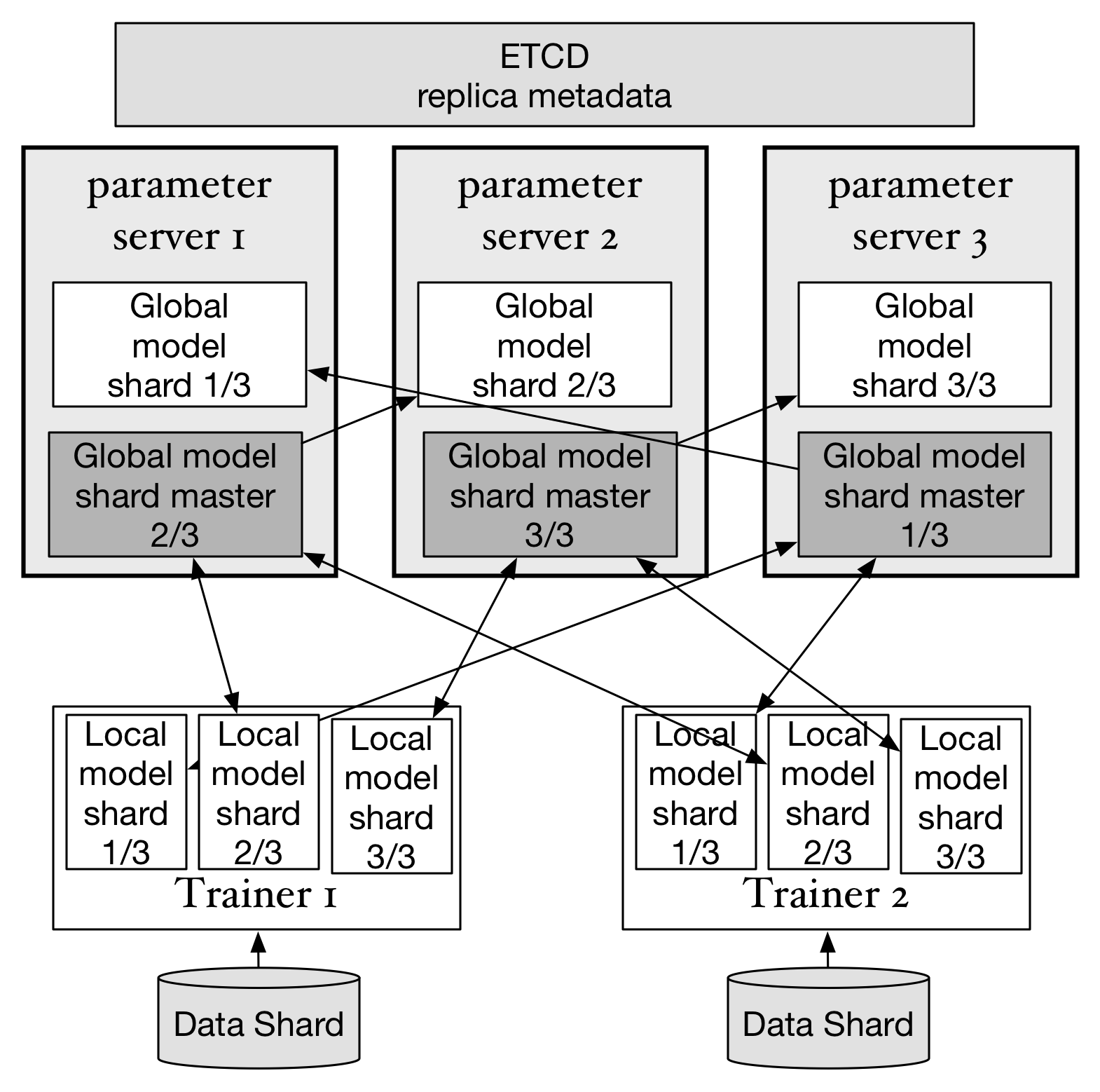

doc/design/images/replica.png

已删除

100644 → 0

174.9 KB

48.0 KB

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动